Notebook

Authors: Colin Small (crs1031@wildcats.unh.edu), Matthew Argall (Matthew.Argall@unh.edu), Marek Petrik (Marek.Petrik@unh.edu)

Introduction

Global-scale energy flow throughout Earth’s magnetosphere is catalyzed by processes that occur at Earth’s magnetopause (MP) in the electron diffusion region (EDR) of magnetic reconnection. Until the launch of the Magnetospheric Multiscale (MMS) mission, only rare, fortuitous circumstances permitted a glimpse of the electron dynamics that break magnetic field lines and energize plasma. MMS employs automated burst triggers onboard the spacecraft and a Scientist-in-the-Loop (SITL) on the ground to select intervals likely to contain diffusion regions. Only low-resolution survey data are available to the SITL, and these are insufficient to resolve electron dynamics. A strategy for the SITL, then, is to select all MP crossings. This has resulted in over 35 potential MP EDR encounters but is labor- and resource-intensive; after manual reclassification, just ∼0.7% of MP crossings, or 0.0001% of the mission lifetime during MMS’s first two years, contained an EDR.

In this notebook, we develop a Long Short-Term Memory (LSTM) neural network to detect magnetopause crossings and automate the SITL classification process. An LSTM developed with this notebook has been implemented in the MMS data stream to provide automated predictions to the SITL.

This model facilitates EDR studies and helps reduce mission operation costs by consolidating manual classification processes into automated routines.

Authors’ notes:

This notebook was written after the original model in use at the MMS Science Data Center (SDC) was developed. We have tried our best to replicate the development steps and hyperparameters of that model, but we cannot guarantee that models developed with this notebook will exactly match the performance of the original.

This notebook was designed on, and is best run on, Google Colab. It must be run either on Colab or on a machine with an NVIDIA GPU and cuDNN installed. If your machine lacks an NVIDIA GPU or cuDNN, or if you run into issues running this notebook yourself, please open it in Google Colab, which provides a virtual GPU. (If TF Keras is unable to identify a GPU, make sure the notebook is set to use one: click the “Runtime” tab in the top menu bar, select “Change runtime type”, select “GPU” in the dropdown menu under “Hardware accelerator”, and click “Save”. Colab will then refresh your runtime, and you will need to re-run all cells.)

Import Libraries

To start, we import the necessary libraries for this notebook.

!pip install nasa-pymms

Requirement already satisfied: nasa-pymms in /usr/local/lib/python3.6/dist-packages (0.3.1)

Requirement already satisfied: tqdm>=4.36.1 in /usr/local/lib/python3.6/dist-packages (from nasa-pymms) (4.41.1)

Requirement already satisfied: matplotlib>=3.1.1 in /usr/local/lib/python3.6/dist-packages (from nasa-pymms) (3.2.2)

Requirement already satisfied: requests>=2.22.0 in /usr/local/lib/python3.6/dist-packages (from nasa-pymms) (2.23.0)

Requirement already satisfied: scipy>=1.4.1 in /usr/local/lib/python3.6/dist-packages (from nasa-pymms) (1.4.1)

Requirement already satisfied: cdflib in /usr/local/lib/python3.6/dist-packages (from nasa-pymms) (0.3.19)

Requirement already satisfied: numpy>=1.8 in /usr/local/lib/python3.6/dist-packages (from nasa-pymms) (1.18.5)

Requirement already satisfied: kiwisolver>=1.0.1 in /usr/local/lib/python3.6/dist-packages (from matplotlib>=3.1.1->nasa-pymms) (1.2.0)

Requirement already satisfied: cycler>=0.10 in /usr/local/lib/python3.6/dist-packages (from matplotlib>=3.1.1->nasa-pymms) (0.10.0)

Requirement already satisfied: pyparsing!=2.0.4,!=2.1.2,!=2.1.6,>=2.0.1 in /usr/local/lib/python3.6/dist-packages (from matplotlib>=3.1.1->nasa-pymms) (2.4.7)

Requirement already satisfied: python-dateutil>=2.1 in /usr/local/lib/python3.6/dist-packages (from matplotlib>=3.1.1->nasa-pymms) (2.8.1)

Requirement already satisfied: certifi>=2017.4.17 in /usr/local/lib/python3.6/dist-packages (from requests>=2.22.0->nasa-pymms) (2020.6.20)

Requirement already satisfied: chardet<4,>=3.0.2 in /usr/local/lib/python3.6/dist-packages (from requests>=2.22.0->nasa-pymms) (3.0.4)

Requirement already satisfied: urllib3!=1.25.0,!=1.25.1,<1.26,>=1.21.1 in /usr/local/lib/python3.6/dist-packages (from requests>=2.22.0->nasa-pymms) (1.24.3)

Requirement already satisfied: idna<3,>=2.5 in /usr/local/lib/python3.6/dist-packages (from requests>=2.22.0->nasa-pymms) (2.10)

Requirement already satisfied: attrs>=19.2.0 in /usr/local/lib/python3.6/dist-packages (from cdflib->nasa-pymms) (20.2.0)

Requirement already satisfied: six in /usr/local/lib/python3.6/dist-packages (from cycler>=0.10->matplotlib>=3.1.1->nasa-pymms) (1.15.0)

from pathlib import Path

from sklearn import preprocessing

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Dropout, LSTM, CuDNNLSTM, BatchNormalization, Bidirectional, Reshape, TimeDistributed

from tensorflow.keras.callbacks import TensorBoard, ModelCheckpoint

from matplotlib import pyplot

from sklearn.metrics import roc_curve, auc, confusion_matrix

from keras import backend as K

from pymms.sdc import mrmms_sdc_api as mms

import keras.backend.tensorflow_backend as tfb

import tensorflow as tf

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

plt.rcParams.update({'font.size': 18})

import datetime as dt

import os

import time

import sklearn

import scipy

import pickle

import random

import requests

TensorFlow 1.x selected.

Using TensorFlow backend.

Creating root data directory /root/data/mms

Creating root data directory /root/data/mms/dropbox

Download, Preprocess, and Format MMS Data

After installing and importing the necessary libraries, we download our training and validation data.

!wget -O training_data.csv https://zenodo.org/record/3884266/files/original_training_data.csv?download=1

!wget -O validation_data.csv https://zenodo.org/record/3884266/files/original_validation_data.csv?download=1

--2020-09-16 16:36:14-- https://zenodo.org/record/3884266/files/original_training_data.csv?download=1

Resolving zenodo.org (zenodo.org)... 188.184.117.155

Connecting to zenodo.org (zenodo.org)|188.184.117.155|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 447842635 (427M) [text/plain]

Saving to: ‘training_data.csv’

training_data.csv 100%[===================>] 427.10M 7.45MB/s in 24s

2020-09-16 16:36:39 (18.0 MB/s) - ‘training_data.csv’ saved [447842635/447842635]

--2020-09-16 16:36:39-- https://zenodo.org/record/3884266/files/original_validation_data.csv?download=1

Resolving zenodo.org (zenodo.org)... 188.184.117.155

Connecting to zenodo.org (zenodo.org)|188.184.117.155|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 90314951 (86M) [text/plain]

Saving to: ‘validation_data.csv’

validation_data.csv 100%[===================>] 86.13M 8.10MB/s in 10s

2020-09-16 16:36:49 (8.45 MB/s) - ‘validation_data.csv’ saved [90314951/90314951]

After downloading the training and validation data, we preprocess our training data in preparation for training the neural network.

We first load the data we downloaded above. The data is a table of measurements from the MMS spacecraft, where each row represents individual measurements taken at a given time and where each column represents a feature (variable) recorded at that time. There is an additional column representing the ground truths for each measurement (whether this measurement was selected by a SITL or not). Then, we will adjust the formatting and datatypes of several of the columns and sort the data by the time of the measurement.

mms_data = pd.read_csv('training_data.csv', index_col=0, infer_datetime_format=True, parse_dates=[0])

mms_data[mms_data['selected'] == False]

| mms1_des_energyspectr_omni_fast_0 | mms1_des_energyspectr_omni_fast_1 | mms1_des_energyspectr_omni_fast_2 | mms1_des_energyspectr_omni_fast_3 | mms1_des_energyspectr_omni_fast_4 | mms1_des_energyspectr_omni_fast_5 | mms1_des_energyspectr_omni_fast_6 | mms1_des_energyspectr_omni_fast_7 | mms1_des_energyspectr_omni_fast_8 | mms1_des_energyspectr_omni_fast_9 | mms1_des_energyspectr_omni_fast_10 | mms1_des_energyspectr_omni_fast_11 | mms1_des_energyspectr_omni_fast_12 | mms1_des_energyspectr_omni_fast_13 | mms1_des_energyspectr_omni_fast_14 | mms1_des_energyspectr_omni_fast_15 | mms1_des_energyspectr_omni_fast_16 | mms1_des_energyspectr_omni_fast_17 | mms1_des_energyspectr_omni_fast_18 | mms1_des_energyspectr_omni_fast_19 | mms1_des_energyspectr_omni_fast_20 | mms1_des_energyspectr_omni_fast_21 | mms1_des_energyspectr_omni_fast_22 | mms1_des_energyspectr_omni_fast_23 | mms1_des_energyspectr_omni_fast_24 | mms1_des_energyspectr_omni_fast_25 | mms1_des_energyspectr_omni_fast_26 | mms1_des_energyspectr_omni_fast_27 | mms1_des_energyspectr_omni_fast_28 | mms1_des_energyspectr_omni_fast_29 | mms1_des_energyspectr_omni_fast_30 | mms1_des_numberdensity_fast | mms1_des_bulkv_dbcs_fast_0 | mms1_des_bulkv_dbcs_fast_1 | mms1_des_heatq_dbcs_fast_0 | mms1_des_heatq_dbcs_fast_1 | mms1_des_temppara_fast | mms1_des_tempperp_fast | mms1_des_prestensor_dbcs_fast_x1_y1 | mms1_des_prestensor_dbcs_fast_x2_y1 | ... 
| mms1_dis_energyspectr_omni_fast_29 | mms1_dis_energyspectr_omni_fast_30 | mms1_dis_numberdensity_fast | mms1_dis_bulkv_dbcs_fast_0 | mms1_dis_bulkv_dbcs_fast_1 | mms1_dis_heatq_dbcs_fast_0 | mms1_dis_heatq_dbcs_fast_1 | mms1_dis_temppara_fast | mms1_dis_tempperp_fast | mms1_dis_prestensor_dbcs_fast_x1_y1 | mms1_dis_prestensor_dbcs_fast_x2_y1 | mms1_dis_prestensor_dbcs_fast_x2_y2 | mms1_dis_prestensor_dbcs_fast_x3_y1 | mms1_dis_prestensor_dbcs_fast_x3_y2 | mms1_dis_prestensor_dbcs_fast_x3_y3 | mms1_dis_temptensor_dbcs_fast_x1_y1 | mms1_dis_temptensor_dbcs_fast_x2_y1 | mms1_dis_temptensor_dbcs_fast_x2_y2 | mms1_dis_temptensor_dbcs_fast_x3_y1 | mms1_dis_temptensor_dbcs_fast_x3_y2 | mms1_dis_temptensor_dbcs_fast_x3_y3 | mms1_dis_temp_anisotropy | mms1_dis_scalar_temperature | mms1_dis_N_Q | mms1_dis_Vz_Q | mms1_dis_nV_Q | mms1_afg_srvy_dmpa_Bx | mms1_afg_srvy_dmpa_By | mms1_afg_srvy_dmpa_Bz | mms1_afg_srvy_dmpa_|B| | mms1_afg_magnetic_pressure | mms1_afg_clock_angle | mms1_afg_Bz_Q | mms1_edp_x | mms1_edp_y | mms1_edp_z | mms1_edp_|E| | mms1_temp_ratio | mms1_plasma_beta | selected | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Epoch | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| 2017-01-01 01:49:08.736524 | 172560370.0 | 141811650.0 | 115564310.0 | 103489660.0 | 109156240.0 | 138017710.0 | 199794740.0 | 313011970.0 | 495821400.0 | 7.675890e+08 | 1.102645e+09 | 1.355750e+09 | 1.283706e+09 | 876457860.0 | 437018100.0 | 178497440.0 | 61324772.0 | 17706034.0 | 5950672.0 | 1453186.0 | 1230702.8 | 1290793.0 | 1361365.00 | 1443553.0 | 1538581.10 | 1600447.1 | 1693706.6 | 1860683.8 | 1821310.1 | 1785482.6 | 2143655.0 | 47.289574 | -64.066220 | -59.850384 | 0.067417 | -0.033087 | 68.663414 | 69.643720 | 0.530681 | 0.000513 | ... | 1847614.4 | 1368769.60 | 45.166210 | -65.301070 | -75.960210 | -0.017214 | -0.054081 | 496.30145 | 558.62010 | 4.105384 | 0.039305 | 3.979379 | 0.077544 | 0.372712 | 3.591420 | 4.105384 | 0.039305 | 3.979379 | 0.077544 | 0.372712 | 3.591420 | -0.111558 | 537.84720 | 0.000000 | 0.000000 | 5217.443037 | -0.026197 | 33.162117 | -39.656940 | 51.695260 | 2.126628e+09 | 1.571586 | 1.467709 | -0.862275 | 1.665013 | 0.827068 | 2.049348 | 1.0 | 524.89600 | False |

| 2017-01-01 01:49:13.236552 | 160474430.0 | 134115120.0 | 113082936.0 | 106597736.0 | 118977260.0 | 157128700.0 | 234196500.0 | 368190240.0 | 588617700.0 | 9.143196e+08 | 1.280595e+09 | 1.386385e+09 | 1.020292e+09 | 546922600.0 | 238565300.0 | 95543030.0 | 34221956.0 | 10795790.0 | 3346869.8 | 1132098.6 | 1230606.6 | 1290689.8 | 1370548.60 | 1217164.6 | 1303508.00 | 1396131.6 | 1496823.2 | 1930027.9 | 1878429.5 | 675747.1 | 2085773.5 | 47.806107 | -34.529873 | -128.342510 | 0.091984 | -0.000412 | 59.059840 | 62.034630 | 0.473132 | 0.000187 | ... | 1385553.0 | 1053661.50 | 46.629120 | -60.415287 | -118.971080 | -0.003761 | 0.163968 | 415.75192 | 523.83150 | 3.883746 | 0.074054 | 3.943084 | 0.049551 | 0.003202 | 3.105979 | 3.883746 | 0.074054 | 3.943084 | 0.049551 | 0.003202 | 3.105979 | -0.206325 | 487.80496 | 1.097182 | 39.256162 | 6031.144668 | 3.003209 | -17.920328 | -18.677692 | 26.057890 | 5.403419e+08 | -1.404753 | 2.396316 | -0.572842 | 0.670425 | -0.570290 | 1.050165 | 1.0 | 929.00616 | False |

| 2017-01-01 01:49:17.736573 | 143446660.0 | 120096200.0 | 103888320.0 | 99487384.0 | 114008050.0 | 152906400.0 | 228558100.0 | 359750530.0 | 572418500.0 | 8.799912e+08 | 1.192516e+09 | 1.208780e+09 | 8.369016e+08 | 437589570.0 | 191214960.0 | 78865330.0 | 28987052.0 | 9752265.0 | 3037489.2 | 1132141.2 | 1230653.4 | 1290740.1 | 1361307.60 | 1453176.6 | 1303684.90 | 1417762.9 | 1178981.4 | 1254457.6 | 1834328.2 | 1900604.5 | 1562550.4 | 43.848970 | -17.841991 | -80.636430 | -0.017504 | 0.007353 | 55.005360 | 61.100243 | 0.432611 | 0.002111 | ... | 1216220.0 | 1003711.90 | 42.998802 | -37.589535 | -101.116325 | 0.002284 | 0.062132 | 379.23505 | 442.85294 | 3.202901 | -0.023277 | 2.898829 | 0.074439 | -0.131550 | 2.612594 | 3.202901 | -0.023277 | 2.898829 | 0.074439 | -0.131550 | 2.612594 | -0.143655 | 421.64697 | 1.625556 | 47.437222 | 5204.241455 | 0.250873 | -3.805478 | -25.772310 | 26.052958 | 5.401373e+08 | -1.504967 | 1.586905 | -0.357424 | 0.633575 | -0.160308 | 0.744894 | 1.0 | 1132.09920 | False |

| 2017-01-01 01:49:22.236602 | 143115380.0 | 120483730.0 | 103335910.0 | 99983890.0 | 115342700.0 | 156445900.0 | 236307790.0 | 374869920.0 | 598786900.0 | 9.304425e+08 | 1.259985e+09 | 1.203378e+09 | 7.605617e+08 | 361202240.0 | 147414610.0 | 58348600.0 | 21312232.0 | 7609467.0 | 3276093.5 | 1132188.6 | 1230705.2 | 1290795.8 | 1361368.00 | 1443556.0 | 1548742.10 | 1391640.8 | 1787557.8 | 1927728.0 | 1754844.5 | 1890987.5 | 2347546.8 | 44.116190 | -24.291311 | -51.504208 | -0.010656 | -0.065078 | 56.319736 | 57.496597 | 0.405184 | 0.001348 | ... | 1146827.8 | 996494.75 | 42.281338 | -15.571530 | -88.482050 | -0.039505 | 0.037287 | 350.79077 | 375.92334 | 2.608262 | 0.016238 | 2.484871 | -0.012547 | -0.062605 | 2.376314 | 2.608262 | 0.016238 | 2.484871 | -0.012547 | -0.062605 | 2.376314 | -0.066856 | 367.54580 | 2.437950 | 63.604532 | 4158.129041 | 0.571565 | -26.909445 | -33.616943 | 43.064415 | 1.475799e+09 | -1.549559 | 0.414231 | 1.962965 | 1.813941 | -1.001314 | 2.854163 | 1.0 | 257.55072 | False |

| 2017-01-01 01:49:26.736624 | 154996270.0 | 129635576.0 | 109879810.0 | 104667430.0 | 118939120.0 | 159722750.0 | 240743730.0 | 384687520.0 | 622868740.0 | 9.923178e+08 | 1.407102e+09 | 1.379264e+09 | 8.729052e+08 | 412877600.0 | 165255970.0 | 64202252.0 | 22482496.0 | 7437224.5 | 3127818.0 | 1132103.5 | 1230611.9 | 1290695.2 | 1361258.80 | 1443435.9 | 1492447.10 | 1570121.2 | 1725425.4 | 1605647.4 | 2037316.2 | 1869736.6 | 2320483.0 | 47.787876 | -43.337650 | -68.804990 | -0.138768 | -0.233581 | 56.954014 | 58.321754 | 0.449897 | -0.001409 | ... | 1509011.9 | 1205417.50 | 44.735374 | -29.033060 | -83.403510 | -0.047671 | -0.056385 | 396.54170 | 400.25833 | 2.947306 | 0.007026 | 2.790271 | -0.076785 | -0.311423 | 2.842150 | 2.947306 | 0.007026 | 2.790271 | -0.076785 | -0.311423 | 2.842150 | -0.009286 | 399.01944 | 0.083262 | 57.780416 | 4519.709417 | -1.338079 | -25.596290 | -35.260128 | 43.591710 | 1.512161e+09 | -1.623025 | 0.009667 | 1.674221 | 0.755573 | -0.422870 | 1.884867 | 1.0 | 423.39264 | False |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 2017-01-31 01:59:37.612761 | 195522660.0 | 169047760.0 | 151144740.0 | 155255970.0 | 187461070.0 | 260878460.0 | 391024700.0 | 601519170.0 | 933239230.0 | 1.431671e+09 | 2.001644e+09 | 1.911962e+09 | 9.213409e+08 | 266009100.0 | 70228190.0 | 23561248.0 | 8219571.0 | 3132344.0 | 1069388.6 | 1156007.0 | 1205684.8 | 1285194.1 | 776630.40 | 1443075.8 | 1507529.50 | 1614608.1 | 1747582.8 | 1609458.8 | 2050434.2 | 2227281.8 | 2083288.2 | 66.366800 | -114.178600 | -66.354935 | 0.118533 | -0.167590 | 54.738000 | 49.998760 | 0.542538 | 0.017298 | ... | 1173978.5 | 946332.50 | 46.211296 | -99.599365 | -137.597990 | -0.087668 | -0.111775 | 269.56824 | 291.43158 | 2.067940 | -0.059106 | 2.042181 | 0.032945 | 0.023344 | 2.201118 | 2.067940 | -0.059106 | 2.042181 | 0.032945 | 0.023344 | 2.201118 | -0.075020 | 284.14380 | 2.026775 | 10.799598 | 722.083772 | -12.985076 | -21.649717 | 3.804093 | 25.530249 | 5.186808e+08 | -2.111054 | 1.825885 | 0.569317 | -1.014906 | -0.478203 | 1.258107 | 1.0 | 451.70038 | False |

| 2017-01-31 01:59:42.112805 | 188999890.0 | 167058830.0 | 153385100.0 | 160276850.0 | 194781820.0 | 265345520.0 | 388861400.0 | 597449200.0 | 947348600.0 | 1.491336e+09 | 1.999952e+09 | 1.605463e+09 | 6.863398e+08 | 206139580.0 | 60198680.0 | 21560316.0 | 8027363.5 | 3163052.5 | 1069362.9 | 1155979.5 | 1205655.9 | 1227593.4 | 1093269.80 | 1310439.9 | 1257120.90 | 1626893.6 | 1687317.9 | 1547724.2 | 1889413.2 | 1736235.6 | 2222297.0 | 64.247070 | -114.180830 | -57.873524 | 0.079878 | -0.027051 | 49.837917 | 48.950280 | 0.507997 | -0.001615 | ... | 1173978.5 | 946332.50 | 46.211296 | -99.599365 | -137.597990 | -0.087668 | -0.111775 | 269.56824 | 291.43158 | 2.067940 | -0.059106 | 2.042181 | 0.032945 | 0.023344 | 2.201118 | 2.067940 | -0.059106 | 2.042181 | 0.032945 | 0.023344 | 2.201118 | -0.075020 | 284.14380 | 2.026775 | 10.799598 | 722.083772 | -15.436447 | 11.214994 | 4.809845 | 19.677261 | 3.081197e+08 | 2.513284 | 2.099205 | 1.721005 | -1.033757 | 1.900864 | 2.764742 | 1.0 | 205.54817 | False |

| 2017-01-31 01:59:46.612839 | 156668940.0 | 136574260.0 | 123834980.0 | 129313670.0 | 158046480.0 | 222452110.0 | 343151600.0 | 552882500.0 | 898671700.0 | 1.377260e+09 | 1.645098e+09 | 1.142623e+09 | 4.697292e+08 | 148775630.0 | 47467200.0 | 18703922.0 | 7264802.5 | 2508120.0 | 1085820.6 | 1155923.6 | 1167832.6 | 1042989.0 | 1352492.10 | 1176428.9 | 1383997.80 | 1534980.2 | 1675194.2 | 1783336.1 | 1992431.1 | 1171974.1 | 2034246.5 | 53.937880 | -147.811950 | -66.332436 | 0.031849 | 0.021172 | 48.725334 | 47.522090 | 0.415525 | -0.001351 | ... | 1173978.5 | 946332.50 | 46.211296 | -99.599365 | -137.597990 | -0.087668 | -0.111775 | 269.56824 | 291.43158 | 2.067940 | -0.059106 | 2.042181 | 0.032945 | 0.023344 | 2.201118 | 2.067940 | -0.059106 | 2.042181 | 0.032945 | 0.023344 | 2.201118 | -0.075020 | 284.14380 | 2.026775 | 10.799598 | 722.083772 | 9.732903 | -5.534757 | 21.615046 | 24.342825 | 4.715547e+08 | -0.517060 | 2.347574 | 2.787715 | -2.293623 | -2.082477 | 4.167586 | 1.0 | 136.35893 | False |

| 2017-01-31 01:59:51.112882 | 148339340.0 | 131617280.0 | 123383950.0 | 133689650.0 | 169121890.0 | 242394990.0 | 371708160.0 | 592586500.0 | 940750800.0 | 1.356828e+09 | 1.457272e+09 | 9.310631e+08 | 3.592990e+08 | 107206430.0 | 34867150.0 | 14601406.0 | 5957955.0 | 1804840.9 | 1102969.0 | 1155993.6 | 1165338.0 | 1181596.9 | 630540.94 | 1371131.6 | 1339956.00 | 1473016.6 | 1658352.9 | 1457381.9 | 1135691.6 | 2071354.1 | 2416306.0 | 52.308780 | -141.634200 | -85.162560 | -0.031770 | 0.035757 | 45.698080 | 44.961994 | 0.374102 | -0.003214 | ... | 1173978.5 | 946332.50 | 46.211296 | -99.599365 | -137.597990 | -0.087668 | -0.111775 | 269.56824 | 291.43158 | 2.067940 | -0.059106 | 2.042181 | 0.032945 | 0.023344 | 2.201118 | 2.067940 | -0.059106 | 2.042181 | 0.032945 | 0.023344 | 2.201118 | -0.075020 | 284.14380 | 2.026775 | 10.799598 | 722.083772 | 13.513677 | -6.461386 | 29.848564 | 33.396190 | 8.875320e+08 | -0.446005 | 3.369051 | 3.261839 | -4.248680 | -2.128106 | 5.763654 | 1.0 | 98.59849 | False |

| 2017-01-31 01:59:55.612917 | 135440930.0 | 119457550.0 | 111787400.0 | 121276030.0 | 155176540.0 | 227165100.0 | 355259520.0 | 570692000.0 | 891547700.0 | 1.239421e+09 | 1.285931e+09 | 8.412920e+08 | 3.517979e+08 | 116526360.0 | 41865636.0 | 18613406.0 | 8321585.5 | 3825070.0 | 1326246.5 | 1155995.0 | 1205672.4 | 1224776.4 | 1275854.50 | 1217636.2 | 838192.25 | 1057988.5 | 1786348.4 | 1358997.8 | 2065777.1 | 2144108.0 | 2296880.8 | 48.376137 | -109.131290 | -104.492080 | -0.023458 | -0.053285 | 46.801380 | 45.059790 | 0.350553 | -0.002084 | ... | 1173978.5 | 946332.50 | 46.211296 | -99.599365 | -137.597990 | -0.087668 | -0.111775 | 269.56824 | 291.43158 | 2.067940 | -0.059106 | 2.042181 | 0.032945 | 0.023344 | 2.201118 | 2.067940 | -0.059106 | 2.042181 | 0.032945 | 0.023344 | 2.201118 | -0.075020 | 284.14380 | 2.026775 | 10.799598 | 722.083772 | -9.631133 | 29.820732 | -9.856265 | 32.850887 | 8.587848e+08 | 1.883189 | 3.069619 | -0.251488 | 1.193291 | 0.494789 | 1.316057 | 1.0 | 431.81090 | False |

302188 rows × 124 columns
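The loading step can be tried on a small in-memory table first. The following is a minimal sketch, assuming a made-up CSV with one feature column (`feature_a`) in place of the real MMS columns; it parses the first column as a datetime index, sorts by time, and filters the unselected rows. It omits `infer_datetime_format`, which is deprecated as of pandas 2.0.

```python
import io
import pandas as pd

# Tiny in-memory CSV standing in for training_data.csv.
# Column names and values here are invented for illustration.
csv_text = """Epoch,feature_a,selected
2017-01-01 01:49:17.736573,0.3,False
2017-01-01 01:49:08.736524,0.1,False
2017-01-01 01:49:13.236552,0.2,True
"""

# Use the first column as the index and parse it as datetimes.
toy = pd.read_csv(io.StringIO(csv_text), index_col=0, parse_dates=[0])

# Order the rows by measurement time.
toy = toy.sort_index()

# Rows that were not selected (the same filter used on mms_data above).
unselected = toy[toy["selected"] == False]
print(unselected)
```

A time-sorted `DatetimeIndex` is what makes the later time-based interpolation and interval slicing possible.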

We save references to the data’s index and column names for later use and pop off the ground-truth column. We will reattach it after interpolating and standardizing the data.

index = mms_data.index

selections = mms_data.pop("selected")

column_names = mms_data.columns

Since the training data may contain missing values or values misreported by the MMS spacecraft (recorded as positive or negative infinity), we replace infinities with NaN and then fill in (interpolate) the missing data.

mms_data = mms_data.replace([np.inf, -np.inf], np.nan)

mms_data = mms_data.interpolate(method='time', limit_area='inside')
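To see what these two calls do, here is a minimal sketch on a toy series. The values and the 4.5 s cadence are assumptions made for illustration, not real MMS data. Note that `limit_area='inside'` only fills gaps that lie between valid samples, so leading and trailing gaps remain NaN.

```python
import numpy as np
import pandas as pd

# Toy series on a regular time grid (invented values).
times = pd.date_range("2017-01-01 00:00:00", periods=6, freq="4500ms")
toy = pd.Series([np.nan, 1.0, np.inf, 3.0, -np.inf, np.nan], index=times)

# Step 1: treat +/- infinity as missing.
cleaned = toy.replace([np.inf, -np.inf], np.nan)

# Step 2: interpolate linearly in time, but only between valid samples.
filled = cleaned.interpolate(method="time", limit_area="inside")

print(filled)
```

In this toy case the interior infinity is replaced by a value halfway between its neighbours, while the first and last two entries stay missing because no valid sample bounds them on both sides.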

We normalize all features with standardization:

$$x' = \frac{x - \bar{x}}{\sigma}$$

where x̄ is the mean and σ is the standard deviation of each feature.

Standardization rescales every feature to zero mean and unit variance, so each value is expressed as a number of standard deviations from its feature’s mean; values are not confined to a fixed range such as −1 to 1. Normalization improves the speed and performance of neural network training because it puts all features on a common scale without changing their relative structure.

scaler = preprocessing.StandardScaler()

mms_data = scaler.fit_transform(mms_data)

mms_data = pd.DataFrame(mms_data, index, column_names)

mms_data = mms_data.join(selections)
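The effect of standardization can be checked by hand on a toy matrix. This is a minimal sketch using plain NumPy with invented values; it uses the population standard deviation (`ddof=0`), which is the convention `StandardScaler` follows.

```python
import numpy as np

# Toy feature matrix: two features on very different scales (invented values).
X = np.array([[1.0e8, 0.5],
              [2.0e8, 1.5],
              [3.0e8, 2.5],
              [9.0e8, 3.5]])

mean = X.mean(axis=0)
std = X.std(axis=0)      # population standard deviation (ddof=0)
Z = (X - mean) / std     # column-wise standardization

print(Z.mean(axis=0))    # close to 0 for each feature
print(Z.std(axis=0))     # close to 1 for each feature
print(np.abs(Z).max())   # largest standardized magnitude in the matrix
```

After scaling, both columns are on a comparable scale even though the raw values differ by many orders of magnitude, and individual standardized values can still have a magnitude greater than one.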

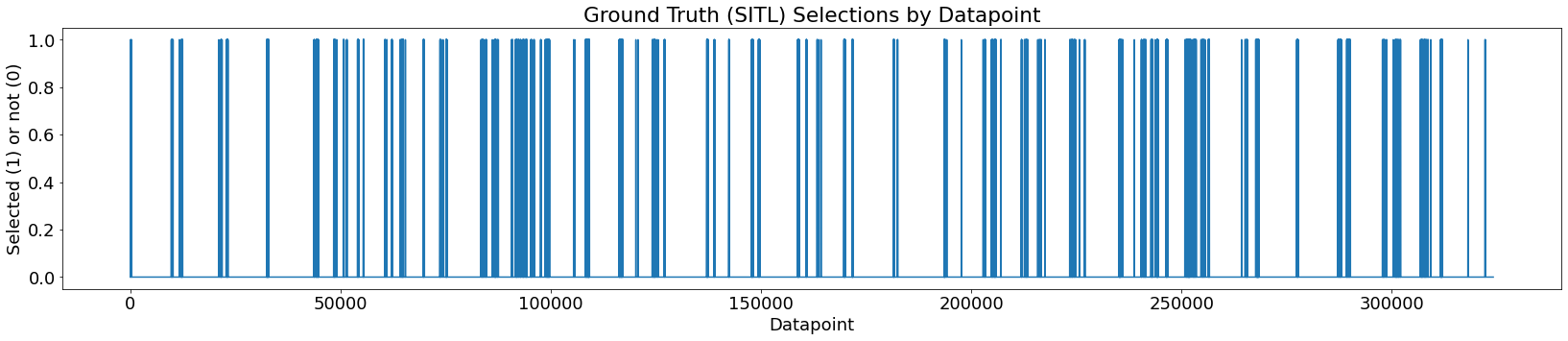

Next, we calculate class weights for our two data classes (selected and non-selected data points). Since the distribution of our data is heavily skewed towards non-selected data points (just 1.9% of all data points in our training data were selected), it’s important to give the class of selected data points a higher weight when training. In fact, without these class weights our model would quickly achieve about 98% accuracy by naively leaving all data points unselected.

false_weight = len(mms_data)/(2*np.bincount(mms_data['selected'].values)[0])

true_weight = len(mms_data)/(2*np.bincount(mms_data['selected'].values)[1])
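The weight formula above is n_samples / (n_classes × class count), the same heuristic scikit-learn calls "balanced" class weights. A minimal sketch on invented labels (2 selected points out of 100, a proportion chosen only for illustration) shows that it gives both classes the same total weight:

```python
import numpy as np

# Toy labels: 2 selected points out of 100 (invented proportions).
labels = np.array([True] * 2 + [False] * 98)

counts = np.bincount(labels.astype(int))   # [n_false, n_true]
n_samples = len(labels)
n_classes = 2

false_weight = n_samples / (n_classes * counts[0])
true_weight = n_samples / (n_classes * counts[1])

# Per-sample weights for each class.
print(false_weight, true_weight)

# Total weight carried by each class: both equal n_samples / n_classes.
print(false_weight * counts[0], true_weight * counts[1])
```

Because the rare class receives a proportionally larger per-sample weight, a model can no longer minimize a weighted loss simply by predicting the majority class everywhere.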

Our entire dataset is not contiguous, and it contains time intervals with no observations. Therefore, we break it up into contiguous chunks. We can do so by breaking up the data into the windows that the SITLs used to review the data.
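As a minimal sketch of this idea, the toy example below slices a time-indexed frame by a list of (start, end) intervals and keeps only the windows that contain data and more than one selected point. The data and the `intervals` list are hypothetical stand-ins; in the notebook the intervals come from the SDC rather than being hard-coded.

```python
import pandas as pd

# Toy time-indexed frame with a gap between two observation periods (invented data).
idx = pd.to_datetime([
    "2017-01-01 01:00:00", "2017-01-01 01:00:05", "2017-01-01 01:00:10",
    "2017-01-02 01:00:00", "2017-01-02 01:00:05", "2017-01-02 01:00:10",
])
toy = pd.DataFrame(
    {"feature": [0.1, 0.2, 0.3, 0.4, 0.5, 0.6],
     "selected": [False, True, True, False, False, False]},
    index=idx,
)

# Hypothetical review intervals standing in for the SITL windows.
intervals = [
    (pd.Timestamp("2017-01-01 00:00:00"), pd.Timestamp("2017-01-01 12:00:00")),
    (pd.Timestamp("2017-01-02 00:00:00"), pd.Timestamp("2017-01-02 12:00:00")),
    (pd.Timestamp("2017-01-03 00:00:00"), pd.Timestamp("2017-01-03 12:00:00")),
]

toy_windows = []
for start, end in intervals:
    window = toy.loc[start:end]   # label-based slice, both endpoints inclusive
    # Keep windows that have data and more than one selected point.
    if not window.empty and window["selected"].sum() > 1:
        toy_windows.append(window)

print(len(toy_windows))
```

Here only the first interval survives: the second has no selected points and the third contains no observations at all.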

sitl_windows = mms.mission_events('sroi', mms_data.index[0].to_pydatetime(), mms_data.index[-1].to_pydatetime(), sc='mms1')

windows = []

for start, end in zip(sitl_windows['tstart'], sitl_windows['tend']):

    window = mms_data[start:end]

    if not window.empty and len(window[window['selected']==True])>1:

        windows.append(window)

windows

[ mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-01 01:49:31.236651 1.883959 ... False

2017-01-01 01:49:35.736674 1.458570 ... False

2017-01-01 01:49:40.236701 1.368072 ... False

2017-01-01 01:49:44.736723 1.338055 ... False

2017-01-01 01:49:49.236750 1.660276 ... False

... ... ... ...

2017-01-01 15:42:28.613021 -0.710802 ... False

2017-01-01 15:42:33.113061 -0.251731 ... False

2017-01-01 15:42:37.613092 -0.093185 ... False

2017-01-01 15:42:42.113131 -0.247850 ... False

2017-01-01 15:42:46.613161 -0.708025 ... False

[11111 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-02 01:39:35.994680 0.320164 ... False

2017-01-02 01:39:40.494716 0.196985 ... False

2017-01-02 01:39:44.994745 0.157529 ... False

2017-01-02 01:39:49.494779 0.300838 ... False

2017-01-02 01:39:53.994809 0.211870 ... False

... ... ... ...

2017-01-02 15:32:28.885849 -0.294389 ... False

2017-01-02 15:32:33.385891 -0.736511 ... False

2017-01-02 15:32:37.885924 -1.195971 ... False

2017-01-02 15:32:42.385967 -1.370307 ... False

2017-01-02 15:32:46.886000 -1.384843 ... False

[11110 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-03 01:34:28.067529 0.549291 ... False

2017-01-03 01:34:32.567559 0.407271 ... False

2017-01-03 01:34:37.067596 0.337537 ... False

2017-01-03 01:34:41.567624 0.476185 ... False

2017-01-03 01:34:46.067661 0.387942 ... False

... ... ... ...

2017-01-03 15:27:29.959758 2.154355 ... False

2017-01-03 15:27:34.459798 2.084295 ... False

2017-01-03 15:27:38.959830 2.215957 ... False

2017-01-03 15:27:43.459870 2.168310 ... False

2017-01-03 15:27:47.959902 2.102627 ... False

[11112 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-04 01:29:30.169168 0.265701 ... False

2017-01-04 01:29:34.669196 0.287085 ... False

2017-01-04 01:29:39.169233 0.482386 ... False

2017-01-04 01:29:43.669261 0.021679 ... False

2017-01-04 01:29:48.169297 -0.160651 ... False

... ... ... ...

2017-01-04 15:22:27.565195 -0.666403 ... False

2017-01-04 15:22:32.065236 -0.602717 ... False

2017-01-04 15:22:36.565269 -0.642503 ... False

2017-01-04 15:22:41.065311 -0.610725 ... False

2017-01-04 15:22:45.565345 -0.660693 ... False

[11111 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-05 01:19:35.672379 0.927498 ... False

2017-01-05 01:19:40.172417 0.804923 ... False

2017-01-05 01:19:44.672446 1.021925 ... False

2017-01-05 01:19:49.172483 0.780899 ... False

2017-01-05 01:19:53.672513 0.700766 ... False

... ... ... ...

2017-01-05 15:12:28.574822 0.022651 ... False

2017-01-05 15:12:33.074864 -0.033623 ... False

2017-01-05 15:12:37.574897 -0.141828 ... False

2017-01-05 15:12:42.074939 -0.203950 ... False

2017-01-05 15:12:46.574971 -0.221731 ... False

[9510 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-06 01:14:31.176632 -0.941931 ... False

2017-01-06 01:14:35.676662 0.299075 ... False

2017-01-06 01:14:40.176699 0.678641 ... False

2017-01-06 01:14:44.676728 0.428966 ... False

2017-01-06 01:14:49.176766 0.655676 ... False

... ... ... ...

2017-01-06 13:59:40.546992 -1.342667 ... True

2017-01-06 13:59:45.047032 -1.300301 ... True

2017-01-06 13:59:49.547066 -1.278993 ... True

2017-01-06 13:59:54.047106 -1.267142 ... True

2017-01-06 13:59:58.547140 -1.197250 ... True

[10207 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-07 02:00:02.850541 0.578615 ... False

2017-01-07 02:00:07.350580 0.330658 ... False

2017-01-07 02:00:11.850611 0.615171 ... False

2017-01-07 02:00:16.350650 0.575314 ... False

2017-01-07 02:00:20.850681 0.645435 ... False

... ... ... ...

2017-01-07 14:57:25.736533 0.171769 ... False

2017-01-07 14:57:30.236577 0.024648 ... False

2017-01-07 14:57:34.736611 0.036262 ... False

2017-01-07 14:57:39.236655 0.129640 ... False

2017-01-07 14:57:43.736689 0.209871 ... False

[10370 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-08 00:59:30.743854 -0.149322 ... False

2017-01-08 00:59:35.243893 0.051192 ... False

2017-01-08 00:59:39.743923 -0.109274 ... False

2017-01-08 00:59:44.243962 -0.134377 ... False

2017-01-08 00:59:48.743993 0.040231 ... False

... ... ... ...

2017-01-08 14:52:28.160111 -1.145365 ... False

2017-01-08 14:52:32.660144 -1.120129 ... False

2017-01-08 14:52:37.160187 -0.970623 ... False

2017-01-08 14:52:41.660221 -1.047322 ... False

2017-01-08 14:52:46.160263 -1.139481 ... False

[11111 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-09 00:54:34.297495 0.150986 ... False

2017-01-09 00:54:38.797525 0.054092 ... False

2017-01-09 00:54:43.297564 0.041586 ... False

2017-01-09 00:54:47.797595 0.211846 ... False

2017-01-09 00:54:52.297634 0.138488 ... False

... ... ... ...

2017-01-09 14:47:27.214472 -1.425762 ... False

2017-01-09 14:47:31.714506 -1.444290 ... False

2017-01-09 14:47:36.214549 -1.437870 ... False

2017-01-09 14:47:40.714583 -1.402435 ... False

2017-01-09 14:47:45.214625 -1.435300 ... False

[11110 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-10 00:49:30.129621 0.250380 ... False

2017-01-10 00:49:34.629653 0.356107 ... False

2017-01-10 00:49:39.129693 0.265523 ... False

2017-01-10 00:49:43.629726 0.210121 ... False

2017-01-10 00:49:48.129766 0.314735 ... False

... ... ... ...

2017-01-10 14:42:27.556663 -1.232397 ... False

2017-01-10 14:42:32.056708 -1.235161 ... False

2017-01-10 14:42:36.556742 -1.265290 ... False

2017-01-10 14:42:41.056784 -1.235246 ... False

2017-01-10 14:42:45.556820 -1.244504 ... False

[11111 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-11 00:44:31.554608 -0.438260 ... False

2017-01-11 00:44:36.054647 -0.247389 ... False

2017-01-11 00:44:40.554679 -0.360649 ... False

2017-01-11 00:44:45.054719 -0.461376 ... False

2017-01-11 00:44:49.554749 -0.250608 ... False

... ... ... ...

2017-01-11 12:39:34.919214 -1.374367 ... False

2017-01-11 12:39:39.419257 -1.388974 ... False

2017-01-11 12:39:43.919291 -1.387084 ... False

2017-01-11 12:39:48.419334 -1.379114 ... False

2017-01-11 12:39:52.919369 -1.375000 ... False

[9539 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-12 00:34:26.372263 0.282036 ... False

2017-01-12 00:34:30.872292 0.207331 ... False

2017-01-12 00:34:35.372328 0.135283 ... False

2017-01-12 00:34:39.872357 0.205012 ... False

2017-01-12 00:34:44.372392 0.200723 ... False

... ... ... ...

2017-01-12 14:27:37.628688 -1.174282 ... False

2017-01-12 14:27:42.128726 -1.222883 ... False

2017-01-12 14:27:46.628757 -1.191178 ... False

2017-01-12 14:27:51.128794 -1.202539 ... False

2017-01-12 14:27:55.628825 -1.169961 ... False

[9328 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-13 00:29:31.532187 0.433031 ... False

2017-01-13 00:29:36.032218 0.775808 ... False

2017-01-13 00:29:40.532246 0.681540 ... False

2017-01-13 00:29:45.032280 0.447294 ... False

2017-01-13 00:29:49.532306 0.495920 ... False

... ... ... ...

2017-01-13 14:22:37.896918 -1.122267 ... False

2017-01-13 14:22:42.396955 -1.095192 ... False

2017-01-13 14:22:46.896974 -1.078990 ... False

2017-01-13 14:22:51.397022 -1.045212 ... False

2017-01-13 14:22:55.897051 -1.078421 ... False

[11113 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-14 00:24:33.973502 0.516664 ... False

2017-01-14 00:24:38.473535 0.454663 ... False

2017-01-14 00:24:42.973562 0.536189 ... False

2017-01-14 00:24:47.473595 0.508872 ... False

2017-01-14 00:24:51.973623 0.595519 ... False

... ... ... ...

2017-01-14 14:17:35.850795 -1.048623 ... False

2017-01-14 14:17:40.350835 -1.060130 ... False

2017-01-14 14:17:44.850865 -1.046742 ... False

2017-01-14 14:17:49.350906 -1.101527 ... False

2017-01-14 14:17:53.850938 -1.069602 ... False

[11112 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-15 00:19:33.423590 0.124151 ... False

2017-01-15 00:19:37.923618 0.203979 ... False

2017-01-15 00:19:42.423652 0.194321 ... False

2017-01-15 00:19:46.923680 0.273831 ... False

2017-01-15 00:19:51.423716 0.097556 ... False

... ... ... ...

2017-01-15 14:12:35.302495 -1.251178 ... False

2017-01-15 14:12:39.802527 -1.217158 ... False

2017-01-15 14:12:44.302565 -1.277525 ... False

2017-01-15 14:12:48.802596 -1.249092 ... False

2017-01-15 14:12:53.302636 -1.251487 ... False

[11112 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-16 00:14:29.606218 -0.753529 ... False

2017-01-16 00:14:34.106254 -0.608870 ... False

2017-01-16 00:14:38.606282 -0.700074 ... False

2017-01-16 00:14:43.106318 -0.569566 ... False

2017-01-16 00:14:47.606346 -0.840524 ... False

... ... ... ...

2017-01-16 14:07:35.991104 -1.021327 ... False

2017-01-16 14:07:40.491143 -1.073761 ... False

2017-01-16 14:07:44.991175 -1.060275 ... False

2017-01-16 14:07:49.491213 -1.045543 ... False

2017-01-16 14:07:53.991245 -1.043030 ... False

[11113 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-17 00:09:33.097461 0.618697 ... False

2017-01-17 00:09:37.597490 -0.010759 ... False

2017-01-17 00:09:42.097524 -0.202842 ... False

2017-01-17 00:09:46.597553 0.128834 ... False

2017-01-17 00:09:51.097587 0.501555 ... False

... ... ... ...

2017-01-17 13:57:33.480536 -1.056261 ... False

2017-01-17 13:57:37.980569 -1.028945 ... False

2017-01-17 13:57:42.480608 -1.056892 ... False

2017-01-17 13:57:46.980639 -1.055822 ... False

2017-01-17 13:57:51.480679 -1.044705 ... False

[11045 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-17 23:59:30.257868 -0.460953 ... False

2017-01-17 23:59:34.757896 -0.477479 ... True

2017-01-17 23:59:39.257933 -0.540812 ... True

2017-01-17 23:59:43.757962 -0.410302 ... True

2017-01-17 23:59:48.257997 -0.714016 ... True

... ... ... ...

2017-01-18 13:47:35.148867 -0.351026 ... False

2017-01-18 13:47:39.648900 0.028805 ... False

2017-01-18 13:47:44.148942 -0.060379 ... False

2017-01-18 13:47:48.648976 -0.036331 ... False

2017-01-18 13:47:53.149017 0.230107 ... False

[11046 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-18 23:54:30.109686 -1.077935 ... False

2017-01-18 23:54:34.609716 -0.473158 ... False

2017-01-18 23:54:39.109751 -0.668999 ... False

2017-01-18 23:54:43.609781 -0.616445 ... False

2017-01-18 23:54:48.109818 -1.026126 ... False

... ... ... ...

2017-01-19 13:42:35.005498 -0.561375 ... False

2017-01-19 13:42:39.505531 -0.582718 ... False

2017-01-19 13:42:44.005572 -0.569202 ... False

2017-01-19 13:42:48.505605 -0.630146 ... False

2017-01-19 13:42:53.005646 -0.643737 ... False

[11046 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-19 23:54:32.495962 0.887217 ... False

2017-01-19 23:54:36.995991 1.040867 ... False

2017-01-19 23:54:41.496029 0.968065 ... False

2017-01-19 23:54:45.996058 0.874947 ... False

2017-01-19 23:54:50.496094 0.996150 ... False

... ... ... ...

2017-01-20 13:42:32.893654 -1.341355 ... False

2017-01-20 13:42:37.393695 -1.340042 ... False

2017-01-20 13:42:41.893728 -1.315527 ... False

2017-01-20 13:42:46.393768 -1.372444 ... False

2017-01-20 13:42:50.893801 -1.342465 ... False

[11045 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-20 23:44:28.879445 0.258103 ... False

2017-01-20 23:44:33.379480 0.165344 ... False

2017-01-20 23:44:37.879512 0.238723 ... False

2017-01-20 23:44:42.379549 0.240764 ... False

2017-01-20 23:44:46.879579 0.325817 ... False

... ... ... ...

2017-01-21 13:32:33.783922 -1.281203 ... False

2017-01-21 13:32:38.283965 -1.240908 ... False

2017-01-21 13:32:42.783999 -1.245359 ... False

2017-01-21 13:32:47.284043 -1.222616 ... False

2017-01-21 13:32:51.784076 -1.244364 ... False

[11046 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-21 23:39:32.397247 0.648462 ... False

2017-01-21 23:39:36.897277 0.125554 ... False

2017-01-21 23:39:41.397315 0.644362 ... False

2017-01-21 23:39:45.897345 0.794314 ... False

2017-01-21 23:39:50.397383 0.748639 ... False

... ... ... ...

2017-01-22 13:27:32.804047 -1.281105 ... False

2017-01-22 13:27:37.304090 -1.316070 ... False

2017-01-22 13:27:41.804122 -1.296095 ... False

2017-01-22 13:27:46.304166 -1.274427 ... False

2017-01-22 13:27:50.804198 -1.259403 ... False

[11045 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-22 23:34:32.497506 0.371171 ... False

2017-01-22 23:34:36.997536 0.306008 ... False

2017-01-22 23:34:41.497574 0.222378 ... False

2017-01-22 23:34:45.997588 0.303669 ... False

2017-01-22 23:34:50.497642 0.300867 ... False

... ... ... ...

2017-01-23 13:22:37.407060 -1.084231 ... False

2017-01-23 13:22:41.907095 -1.132926 ... False

2017-01-23 13:22:46.407137 -1.138529 ... False

2017-01-23 13:22:50.907171 -1.112983 ... False

2017-01-23 13:22:55.407213 -1.114196 ... False

[11046 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-23 23:30:17.579249 0.625622 ... False

2017-01-23 23:30:22.079286 0.446295 ... False

2017-01-23 23:30:26.579317 0.340665 ... False

2017-01-23 23:30:31.079355 0.494095 ... False

2017-01-23 23:30:35.579386 0.285995 ... False

... ... ... ...

2017-01-24 08:16:56.833894 0.304322 ... False

2017-01-24 08:17:01.333935 0.400155 ... False

2017-01-24 08:17:05.833968 0.147983 ... False

2017-01-24 08:17:10.334009 0.348916 ... False

2017-01-24 08:17:14.834041 0.285743 ... False

[7026 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-24 23:25:19.951727 0.397294 ... False

2017-01-24 23:25:24.451768 0.536392 ... False

2017-01-24 23:25:28.951798 0.343806 ... False

2017-01-24 23:25:33.451838 0.284095 ... False

2017-01-24 23:25:37.951870 0.483337 ... False

... ... ... ...

2017-01-25 13:06:44.364210 -1.271923 ... False

2017-01-25 13:06:48.864244 -1.243242 ... False

2017-01-25 13:06:53.364289 -1.241685 ... False

2017-01-25 13:06:57.864325 -1.216165 ... False

2017-01-25 13:07:02.364367 -1.243583 ... False

[10957 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-25 23:20:21.915845 0.629981 ... False

2017-01-25 23:20:26.415884 0.712099 ... False

2017-01-25 23:20:30.915915 0.690743 ... False

2017-01-25 23:20:35.415953 0.771954 ... False

2017-01-25 23:20:39.915985 0.598590 ... False

... ... ... ...

2017-01-26 13:01:41.834981 1.751733 ... False

2017-01-26 13:01:46.335025 1.385139 ... False

2017-01-26 13:01:50.835061 1.505051 ... False

2017-01-26 13:01:55.335105 2.003533 ... False

2017-01-26 13:01:59.835140 2.122904 ... False

[10956 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-26 23:15:17.804388 1.620798 ... False

2017-01-26 23:15:22.304427 1.499084 ... False

2017-01-26 23:15:26.804459 1.292690 ... False

2017-01-26 23:15:31.304499 1.313568 ... False

2017-01-26 23:15:35.804530 1.579847 ... False

... ... ... ...

2017-01-27 12:56:46.723774 -1.481024 ... False

2017-01-27 12:56:51.223817 -1.474493 ... False

2017-01-27 12:56:55.723852 -1.458199 ... False

2017-01-27 12:57:00.223895 -1.479174 ... False

2017-01-27 12:57:04.723930 -1.497171 ... False

[10957 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-27 23:05:17.911570 0.274722 ... False

2017-01-27 23:05:22.411609 0.108195 ... False

2017-01-27 23:05:26.911641 0.005769 ... False

2017-01-27 23:05:31.411680 -0.030007 ... False

2017-01-27 23:05:35.911711 -0.060532 ... False

... ... ... ...

2017-01-28 12:46:42.333037 -1.247610 ... False

2017-01-28 12:46:46.833072 -1.237887 ... False

2017-01-28 12:46:51.333117 -1.219926 ... False

2017-01-28 12:46:55.833153 -1.233068 ... False

2017-01-28 12:47:00.333198 -1.255480 ... False

[10957 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-28 23:00:18.906511 0.274405 ... False

2017-01-28 23:00:23.406551 0.333638 ... False

2017-01-28 23:00:27.906583 0.324077 ... False

2017-01-28 23:00:32.406623 0.413629 ... False

2017-01-28 23:00:36.906655 0.240758 ... False

... ... ... ...

2017-01-29 12:41:43.330800 -1.292784 ... False

2017-01-29 12:41:47.830835 -1.283827 ... False

2017-01-29 12:41:52.330878 -1.331312 ... False

2017-01-29 12:41:56.830914 -1.298229 ... False

2017-01-29 12:42:01.330958 -1.317063 ... False

[10957 rows x 124 columns],

mms1_des_energyspectr_omni_fast_0 ... selected

Epoch ...

2017-01-29 23:00:22.371527 0.205416 ... False

2017-01-29 23:00:26.871558 0.070299 ... False

2017-01-29 23:00:31.371597 0.251162 ... False

2017-01-29 23:00:35.871629 0.143420 ... False

2017-01-29 23:00:40.371669 0.299162 ... False

... ... ... ...

2017-01-30 12:41:37.792835 3.766927 ... False

2017-01-30 12:41:42.292879 3.941938 ... False

2017-01-30 12:41:46.792914 4.024080 ... False

2017-01-30 12:41:51.292959 4.471779 ... False

2017-01-30 12:41:55.792994 4.125512 ... False

[10954 rows x 124 columns]]

Finally, we break up our data into individual sequences that will be fed to our neural network.

We define a SEQ_LEN variable that determines the length of our sequences. This variable will also be passed to our network so that it knows how long a data sequence to expect while training. The choice of sequence length is largely arbitrary.

SEQ_LEN = 250

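Each window is cut into consecutive, non-overlapping sequences of SEQ_LEN data points. For each one, we assemble two sequences: an X_sequence containing the data points themselves and a y_sequence containing the truth values for those data points (whether or not those data points were selected by a SITL). Data points left over at the end of a window that do not fill a complete sequence are discarded.

We add those sequences to four collections: X_train and y_train containing X_sequences and y_sequences for our training data, and X_test and y_test containing X_sequences and y_sequences for our testing data. The split is made per window: each window is assigned at random to training with probability 0.6 and to testing otherwise, so roughly 60% of the sequences go to training and the remaining 40% to testing. The assignment is repeated until the training set contains more sequences than the test set.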

while True:
    X_train, X_test, y_train, y_test = [], [], [], []
    sequences = []
    for i in range(len(windows)):
        X_sequence = []
        y_sequence = []
        if random.random() < 0.6:
            for value in windows[i].values:
                X_sequence.append(value[:-1])
                y_sequence.append(value[-1])
                if len(X_sequence) == SEQ_LEN:
                    X_train.append(X_sequence.copy())
                    y_train.append(y_sequence.copy())
                    X_sequence = []
                    y_sequence = []
        else:
            for value in windows[i].values:
                X_sequence.append(value[:-1])
                y_sequence.append(value[-1])
                if len(X_sequence) == SEQ_LEN:
                    X_test.append(X_sequence.copy())
                    y_test.append(y_sequence.copy())
                    X_sequence = []
                    y_sequence = []
    X_train = np.array(X_train)
    X_test = np.array(X_test)
    y_train = np.expand_dims(np.array(y_train), axis=2)
    y_test = np.expand_dims(np.array(y_test), axis=2)
    if len(X_train) > len(X_test):
        break
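The chunking rule used in the loop above can be seen in isolation with a small NumPy sketch. The helper name `chunk_window` and the toy array are assumptions made for illustration and are not part of the notebook. The helper keeps only complete, non-overlapping sequences and drops leftover rows at the end of a window, as the loop does.

```python
import numpy as np


def chunk_window(values, seq_len):
    """Split a (rows, columns) array into non-overlapping sequences.

    Hypothetical helper: the last column is treated as the label, and
    trailing rows that do not fill a complete sequence are dropped.
    """
    n_sequences = len(values) // seq_len
    trimmed = values[: n_sequences * seq_len]
    chunks = trimmed.reshape(n_sequences, seq_len, values.shape[1])
    X = chunks[:, :, :-1]  # every column except the label
    y = chunks[:, :, -1:]  # label column, kept 3-D like np.expand_dims(..., axis=2)
    return X, y


# Toy window: 11 rows, 3 feature columns plus 1 label column.
toy_window = np.arange(11 * 4).reshape(11, 4)
X_toy, y_toy = chunk_window(toy_window, seq_len=5)
print(X_toy.shape, y_toy.shape)
```

With a sequence length of 5, the 11-row toy window yields two sequences and the last row is dropped. By the same rule, a window of 11111 rows cut with SEQ_LEN = 250 yields 44 sequences.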

We can see how many sequences of data we have for training and testing, respectively:

print(f"Number of sequences in training data: {len(X_train)}")

print(f"Number of sequences in test data: {len(X_test)}")

Number of sequences in training data: 753

Number of sequences in test data: 519

Define and Train LSTM¶

Now that we have processed our data into our training and test sets, we can begin to build and train our LSTM.

First, we need to define two custom functions: an F1 score and a weighted binary cross-entropy.

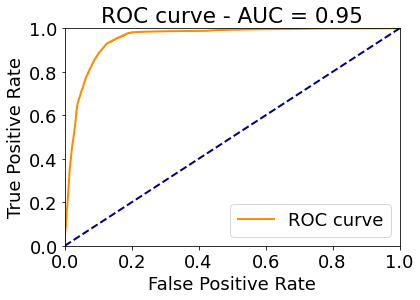

An F1 score is a measure of a model’s accuracy, calculated as a balance of the model’s precision (the number of true positives predicted by the model divided by the total number of positives predicted by the model) and recall (the number of true positives predicted by the model divided by the number of actual positives in the data):

We will evaluate our model using the F1 score since we want to strike a balance between the model’s precision and recall. Remember, we cannot rely on plain accuracy (the number of true positives and true negatives divided by the number of data points in the data) because of the imbalance between our classes: selected data points are rare, so a model that never selects anything would still score a high accuracy.
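To make the point about class imbalance concrete, here is a minimal NumPy sketch. The function name and the toy labels are assumptions chosen for illustration, and the calculation is done on plain arrays rather than on Keras tensors. Two predictors reach the same accuracy on the toy data but very different F1 scores.

```python
import numpy as np


def precision_recall_f1(y_true, y_pred):
    """Return (precision, recall, F1) for boolean label arrays."""
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    true_positives = np.sum(y_true & y_pred)
    # Guard against division by zero when nothing is predicted or present.
    precision = true_positives / max(np.sum(y_pred), 1)
    recall = true_positives / max(np.sum(y_true), 1)
    denominator = precision + recall
    f1 = 2 * precision * recall / denominator if denominator > 0 else 0.0
    return precision, recall, f1


# Toy data: 100 points, only 5 of which are positive ("selected").
y_true = np.zeros(100, dtype=bool)
y_true[:5] = True

# Predictor A never selects anything.
never = np.zeros(100, dtype=bool)

# Predictor B finds 4 of the 5 positives and raises 4 false alarms.
some = np.zeros(100, dtype=bool)
some[:4] = True
some[5:9] = True

accuracy_never = np.mean(never == y_true)
accuracy_some = np.mean(some == y_true)
_, _, f1_never = precision_recall_f1(y_true, never)
_, _, f1_some = precision_recall_f1(y_true, some)

print(f"never: accuracy={accuracy_never:.2f}, f1={f1_never:.2f}")
print(f"some:  accuracy={accuracy_some:.2f}, f1={f1_some:.2f}")
```

Both predictors are 95% accurate, yet only the second one identifies any positives, which is what the F1 score rewards.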

# (Credit: Paddy and Kev1n91 from https://stackoverflow.com/a/45305384/3988976)
def f1(y_true, y_pred):
    def recall(y_true, y_pred):
        """Recall metric.

        Only computes a batch-wise average of recall.

        Computes the recall, a metric for multi-label classification of
        how many relevant items are selected.
        """
        true_positives = K.sum(K.round(K.clip(y_true * y_pred, 0, 1)))
        possible_positives = K.sum(K.round(K.clip(y_true, 0, 1)))
        recall = true_positives / (possible_positives + K.epsilon())
        return recall

    def precision(y_true, y_pred):
        """Precision metric.

        Only computes a batch-wise average of precision.

        Computes the precision, a metric for multi-label classification of
        how many selected items are relevant.
        """
        true_positives = K.sum(K.round(K.clip(y_true * y_pred, 0, 1)))
        predicted_positives = K.sum(K.round(K.clip(y_pred, 0, 1)))
        precision = true_positives / (predicted_positives + K.epsilon())
        return precision

    precision = precision(y_true, y_pred)
    recall = recall(y_true, y_pred)
    return 2*((precision*recall)/(precision+recall+K.epsilon()))

Cross-entropy is a function used to determine the loss between a set of predictions and their truth values. The larger the difference between a prediction and its true value, the larger the loss will be. In general, many machine learning architectures (including our LSTM) are designed to minimize their given loss function. A perfect model will have a loss of 0.

Binary cross-entropy is used when we only have two classes (in our case, selected or not selected) and weighted binary cross-entropy allows us to assign a weight to one of the classes. This weight can effectively increase or decrease the loss of that class. In our case, we have previously defined a variable true_weight to be the class weight for positive (selected) datapoints. We will pass that weight into the function.

This cross-entropy function will be passed in to our model as our loss function.

(Because the loss function of a model needs to be differentiable to perform gradient descent, we cannot use our F1 score as our loss function.)
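As a rough illustration of how the class weight changes the loss, the following NumPy sketch evaluates the weighted binary cross-entropy formula directly on probabilities. The function name `weighted_bce`, the toy arrays, and the weight of 3.0 are assumptions made for this example; they are not the notebook's loss function or its true_weight value.

```python
import numpy as np


def weighted_bce(y_true, p_pred, pos_weight, eps=1e-7):
    """Mean weighted binary cross-entropy on probabilities (illustrative).

    Positive targets contribute -pos_weight * log(p);
    negative targets contribute -log(1 - p).
    """
    p = np.clip(p_pred, eps, 1 - eps)  # avoid log(0)
    loss = -(pos_weight * y_true * np.log(p) + (1 - y_true) * np.log(1 - p))
    return loss.mean()


# One positive and three negatives, all predicted with probability 0.2.
y_true = np.array([1.0, 0.0, 0.0, 0.0])
p_pred = np.array([0.2, 0.2, 0.2, 0.2])

unweighted = weighted_bce(y_true, p_pred, pos_weight=1.0)
weighted = weighted_bce(y_true, p_pred, pos_weight=3.0)  # hypothetical weight

print(f"pos_weight=1.0: {unweighted:.4f}")
print(f"pos_weight=3.0: {weighted:.4f}")
```

Raising the weight makes the missed positive more costly, so training is pushed harder toward recovering the rare selected data points.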

# (Credit: tobigue from https://stackoverflow.com/questions/42158866/neural-network-for-multi-label-classification-with-large-number-of-classes-outpu)
def weighted_binary_crossentropy(target, output):
    """
    Weighted binary crossentropy between an output tensor
    and a target tensor. true_weight is used as a multiplier
    for the positive targets.

    Combination of the following functions:
    * keras.losses.binary_crossentropy
    * keras.backend.tensorflow_backend.binary_crossentropy
    * tf.nn.weighted_cross_entropy_with_logits
    """
    # transform back to logits
    _epsilon = tfb._to_tensor(tfb.epsilon(), output.dtype.base_dtype)
    output = tf.clip_by_value(output, _epsilon, 1 - _epsilon)
    output = tf.log(output / (1 - output))
    # compute weighted loss
    loss = tf.nn.weighted_cross_entropy_with_logits(targets=target,
                                                    logits=output,
                                                    pos_weight=true_weight)
    return tf.reduce_mean(loss, axis=-1)

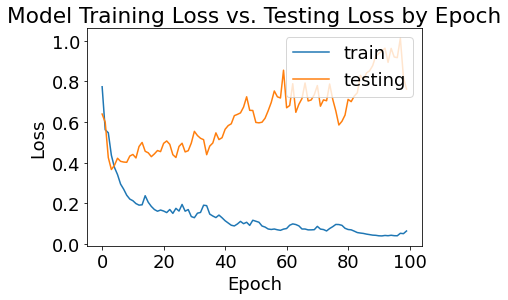

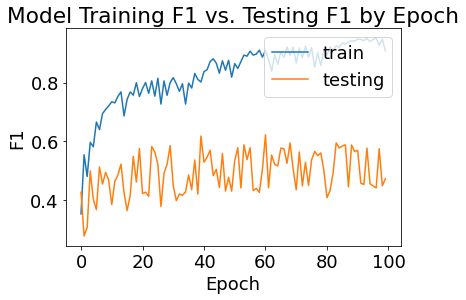

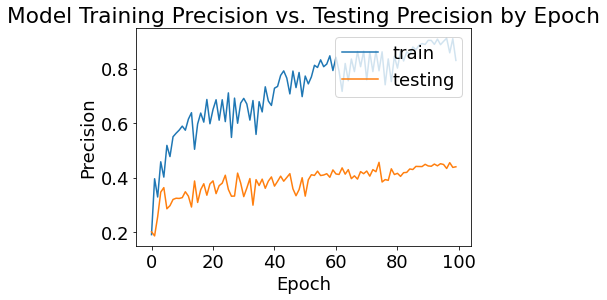

Before building our LSTM, we define several hyperparameters that will define how the model is trained:

EPOCHS: The number of complete passes the model makes over our training dataset

BATCH_SIZE: The number of sequences the model processes before each update of its weights

LAYER_SIZE: The number of LSTM units in each LSTM layer of the model

Choices for these hyperparameters are largely arbitrary and can be altered to tune our LSTM.

EPOCHS = 100

BATCH_SIZE = 128

LAYER_SIZE = 300
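As a quick piece of arithmetic, the sketch below shows what these values imply for the 753 training sequences reported earlier. The variable names other than EPOCHS and BATCH_SIZE are assumptions for this example.

```python
import math

EPOCHS = 100
BATCH_SIZE = 128
n_train_sequences = 753  # training sequences reported earlier in this notebook

# Number of weight updates (batches) in one pass over the training data.
updates_per_epoch = math.ceil(n_train_sequences / BATCH_SIZE)

# The final batch of each epoch is smaller than BATCH_SIZE.
last_batch_size = n_train_sequences - (updates_per_epoch - 1) * BATCH_SIZE

# Upper bound on weight updates if training runs for every epoch.
total_updates = updates_per_epoch * EPOCHS

print(updates_per_epoch, last_batch_size, total_updates)
```

Each epoch therefore consists of five full batches of 128 sequences followed by one partial batch.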

We now define our LSTM.

For this version of the model, we use two bidirectional LSTM layers, two dropout layers, and one time-distributed dense layer.

Internally, an LSTM layer uses a for loop to iterate over the timesteps of a sequence, while maintaining states that encode information from those timesteps. Using these internal states, the LSTM learns the characteristics of our data (the X_sequences we defined earlier) and how those data relate to our expected output (the y_sequences we defined earlier). Normal (unidirectional) LSTMs only encode information from previously seen timesteps. Bidirectional LSTMs can encode information from both before and after a given timestep.

With the addition of a dense layer, the LSTM will output a value between 0 and 1 that corresponds to the model’s certainty about whether or not a timestep was selected by the SITL.
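To turn these per-timestep values into yes/no selections, one common approach is to apply a threshold. The sketch below assumes a threshold of 0.5 and a hypothetical helper named `to_selections`; neither is taken from this notebook, and the toy probabilities are made up for illustration.

```python
import numpy as np


def to_selections(probabilities, threshold=0.5):
    """Convert per-timestep probabilities into boolean selections.

    Hypothetical helper; the 0.5 threshold is an assumption.
    """
    return np.asarray(probabilities) >= threshold


# Shape (1 sequence, 4 timesteps, 1 output), matching the model's output layout.
toy_probabilities = np.array([[[0.1], [0.7], [0.9], [0.4]]])
toy_selected = to_selections(toy_probabilities)

print(toy_selected[0, :, 0])
```

A lower threshold would select more timesteps (favoring recall), while a higher one would select fewer (favoring precision).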

model_name = f"{SEQ_LEN}-SEQ_LEN-{BATCH_SIZE}-BATCH_SIZE-{LAYER_SIZE}-LAYER_SIZE-{int(time.time())}"

model = Sequential()

model.add(Bidirectional(LSTM(LAYER_SIZE, return_sequences=True), input_shape=(None, X_train.shape[2])))

model.add(Dropout(0.4))

model.add(Bidirectional(LSTM(LAYER_SIZE, return_sequences=True), input_shape=(None, X_train.shape[2])))

model.add(Dropout(0.4))

model.add(TimeDistributed(Dense(1, activation='sigmoid')))

opt = tf.keras.optimizers.Adam()

model.compile(loss=weighted_binary_crossentropy,
              optimizer=opt,
              metrics=['accuracy', f1, tf.keras.metrics.Precision()])

WARNING:tensorflow:From /tensorflow-1.15.2/python3.6/tensorflow_core/python/ops/init_ops.py:97: calling GlorotUniform.__init__ (from tensorflow.python.ops.init_ops) with dtype is deprecated and will be removed in a future version.

Instructions for updating:

Call initializer instance with the dtype argument instead of passing it to the constructor

WARNING:tensorflow:From /tensorflow-1.15.2/python3.6/tensorflow_core/python/ops/init_ops.py:97: calling Orthogonal.__init__ (from tensorflow.python.ops.init_ops) with dtype is deprecated and will be removed in a future version.

Instructions for updating:

Call initializer instance with the dtype argument instead of passing it to the constructor

WARNING:tensorflow:From /tensorflow-1.15.2/python3.6/tensorflow_core/python/ops/init_ops.py:97: calling Zeros.__init__ (from tensorflow.python.ops.init_ops) with dtype is deprecated and will be removed in a future version.

Instructions for updating:

Call initializer instance with the dtype argument instead of passing it to the constructor

WARNING:tensorflow:From /tensorflow-1.15.2/python3.6/tensorflow_core/python/ops/resource_variable_ops.py:1630: calling BaseResourceVariable.__init__ (from tensorflow.python.ops.resource_variable_ops) with constraint is deprecated and will be removed in a future version.

Instructions for updating:

If using Keras pass *_constraint arguments to layers.

WARNING:tensorflow:From <ipython-input-16-f6bf984e786f>:20: calling weighted_cross_entropy_with_logits (from tensorflow.python.ops.nn_impl) with targets is deprecated and will be removed in a future version.

Instructions for updating:

targets is deprecated, use labels instead

model.summary()

Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
bidirectional (Bidirectional (None, None, 600)         1017600
_________________________________________________________________
dropout (Dropout)            (None, None, 600)         0
_________________________________________________________________
bidirectional_1 (Bidirection (None, None, 600)         2162400
_________________________________________________________________
dropout_1 (Dropout)          (None, None, 600)         0
_________________________________________________________________
time_distributed (TimeDistri (None, None, 1)           601
=================================================================
Total params: 3,180,601
Trainable params: 3,180,601
Non-trainable params: 0
_________________________________________________________________
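The parameter counts in the summary can be reproduced by hand. The sketch below uses the standard LSTM parameter formula (four gates, each with an input kernel, a recurrent kernel, and a bias). The helper names are assumptions for this example, and the feature count of 123 is inferred from the 124-column windows with the selected column removed.

```python
def lstm_params(input_dim, units):
    """Parameters of one LSTM layer: 4 gates x (input kernel + recurrent kernel + bias)."""
    return 4 * (units * (input_dim + units) + units)


def bidirectional_lstm_params(input_dim, units):
    """A bidirectional wrapper holds one forward and one backward LSTM."""
    return 2 * lstm_params(input_dim, units)


LAYER_SIZE = 300
n_features = 123  # inferred: 124 columns per window minus the 'selected' column

layer_1 = bidirectional_lstm_params(n_features, LAYER_SIZE)
# The second layer receives the concatenated forward/backward outputs (2 * 300).
layer_2 = bidirectional_lstm_params(2 * LAYER_SIZE, LAYER_SIZE)
# Dense(1): one weight per input plus one bias, shared across timesteps.
dense = 2 * LAYER_SIZE + 1

total = layer_1 + layer_2 + dense
print(layer_1, layer_2, dense, total)
```

The dropout layers contribute no parameters, so the three values sum to the total reported by model.summary().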

We set our training process to save the best version of our model according to the previously defined F1 score, measured on the validation data (val_f1). After each epoch, if the model achieves a higher validation F1 score than the previous best, the model saved on disk is overwritten with the current model.

filepath = "mp-dl-unh"

checkpoint = ModelCheckpoint(filepath, monitor='val_f1', verbose=1, save_best_only=True, mode='max')
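The save-only-on-improvement rule can be sketched in plain Python. The function name `epochs_that_save` is an assumption for this example; the val_f1 values are copied from the first ten epochs of the training log in this notebook.

```python
def epochs_that_save(val_scores):
    """Return the 1-based epochs at which a 'save best only' rule would save.

    Mirrors mode='max': a save happens only when the monitored value is
    strictly greater than the best value seen so far.
    """
    best = float("-inf")
    saved_epochs = []
    for epoch, score in enumerate(val_scores, start=1):
        if score > best:
            best = score
            saved_epochs.append(epoch)
    return saved_epochs


# val_f1 for epochs 1-10, taken from the training log in this notebook.
val_f1_log = [0.4276, 0.2793, 0.3077, 0.5000, 0.4034,
              0.3684, 0.5137, 0.4554, 0.4948, 0.4683]

print(epochs_that_save(val_f1_log))
```

For these ten epochs the model on disk is written three times, at epochs 1, 4, and 7, which matches the "improved" messages in the log.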

The following will train the model and save the training history for later visualization.

history = model.fit(
    x=X_train, y=y_train,
    batch_size=BATCH_SIZE,
    epochs=EPOCHS,
    validation_data=(X_test, y_test),
    callbacks=[checkpoint],
    verbose=1,
    shuffle=False
)

WARNING:tensorflow:From /tensorflow-1.15.2/python3.6/tensorflow_core/python/ops/math_grad.py:1424: where (from tensorflow.python.ops.array_ops) is deprecated and will be removed in a future version.

Instructions for updating:

Use tf.where in 2.0, which has the same broadcast rule as np.where

Train on 753 samples, validate on 519 samples

Epoch 1/100

640/753 [========================>.....] - ETA: 1s - loss: 0.8397 - acc: 0.6974 - f1: 0.3451 - precision: 0.1849

Epoch 00001: val_f1 improved from -inf to 0.42757, saving model to mp-dl-unh

753/753 [==============================] - 15s 19ms/sample - loss: 0.7737 - acc: 0.7232 - f1: 0.3541 - precision: 0.1909 - val_loss: 0.6396 - val_acc: 0.8299 - val_f1: 0.4276 - val_precision: 0.2014

Epoch 2/100

640/753 [========================>.....] - ETA: 1s - loss: 0.6136 - acc: 0.8747 - f1: 0.5461 - precision: 0.3894

Epoch 00002: val_f1 did not improve from 0.42757

753/753 [==============================] - 11s 14ms/sample - loss: 0.5620 - acc: 0.8849 - f1: 0.5549 - precision: 0.3958 - val_loss: 0.5994 - val_acc: 0.8189 - val_f1: 0.2793 - val_precision: 0.1862

Epoch 3/100

640/753 [========================>.....] - ETA: 1s - loss: 0.5949 - acc: 0.8367 - f1: 0.4756 - precision: 0.3271

Epoch 00003: val_f1 did not improve from 0.42757

753/753 [==============================] - 11s 14ms/sample - loss: 0.5476 - acc: 0.8467 - f1: 0.4810 - precision: 0.3289 - val_loss: 0.4256 - val_acc: 0.8731 - val_f1: 0.3077 - val_precision: 0.2567

Epoch 4/100

640/753 [========================>.....] - ETA: 1s - loss: 0.4788 - acc: 0.8978 - f1: 0.5785 - precision: 0.4482

Epoch 00004: val_f1 improved from 0.42757 to 0.50001, saving model to mp-dl-unh

753/753 [==============================] - 11s 14ms/sample - loss: 0.4369 - acc: 0.9070 - f1: 0.5971 - precision: 0.4580 - val_loss: 0.3668 - val_acc: 0.9128 - val_f1: 0.5000 - val_precision: 0.3469

Epoch 5/100

640/753 [========================>.....] - ETA: 1s - loss: 0.4156 - acc: 0.8689 - f1: 0.5636 - precision: 0.3923

Epoch 00005: val_f1 did not improve from 0.50001

753/753 [==============================] - 11s 14ms/sample - loss: 0.3772 - acc: 0.8815 - f1: 0.5819 - precision: 0.4019 - val_loss: 0.3872 - val_acc: 0.9183 - val_f1: 0.4034 - val_precision: 0.3632

Epoch 6/100

640/753 [========================>.....] - ETA: 1s - loss: 0.3745 - acc: 0.9192 - f1: 0.6637 - precision: 0.5168

Epoch 00006: val_f1 did not improve from 0.50001

753/753 [==============================] - 11s 14ms/sample - loss: 0.3420 - acc: 0.9244 - f1: 0.6662 - precision: 0.5181 - val_loss: 0.4222 - val_acc: 0.8848 - val_f1: 0.3684 - val_precision: 0.2857

Epoch 7/100

640/753 [========================>.....] - ETA: 1s - loss: 0.3198 - acc: 0.9050 - f1: 0.6347 - precision: 0.4735

Epoch 00007: val_f1 improved from 0.50001 to 0.51366, saving model to mp-dl-unh

753/753 [==============================] - 11s 14ms/sample - loss: 0.2950 - acc: 0.9119 - f1: 0.6405 - precision: 0.4771 - val_loss: 0.4070 - val_acc: 0.8908 - val_f1: 0.5137 - val_precision: 0.2971

Epoch 8/100

640/753 [========================>.....] - ETA: 1s - loss: 0.2917 - acc: 0.9302 - f1: 0.6997 - precision: 0.5554

Epoch 00008: val_f1 did not improve from 0.51366

753/753 [==============================] - 11s 14ms/sample - loss: 0.2700 - acc: 0.9330 - f1: 0.6947 - precision: 0.5498 - val_loss: 0.4036 - val_acc: 0.9009 - val_f1: 0.4554 - val_precision: 0.3199

Epoch 9/100

640/753 [========================>.....] - ETA: 1s - loss: 0.2607 - acc: 0.9331 - f1: 0.7108 - precision: 0.5656

Epoch 00009: val_f1 did not improve from 0.51366

753/753 [==============================] - 11s 14ms/sample - loss: 0.2403 - acc: 0.9365 - f1: 0.7086 - precision: 0.5627 - val_loss: 0.4023 - val_acc: 0.9023 - val_f1: 0.4948 - val_precision: 0.3243

Epoch 10/100

640/753 [========================>.....] - ETA: 1s - loss: 0.2405 - acc: 0.9361 - f1: 0.7232 - precision: 0.5768

Epoch 00010: val_f1 did not improve from 0.51366

753/753 [==============================] - 11s 14ms/sample - loss: 0.2214 - acc: 0.9394 - f1: 0.7211 - precision: 0.5741 - val_loss: 0.4330 - val_acc: 0.9020 - val_f1: 0.4683 - val_precision: 0.3236

Epoch 11/100

640/753 [========================>.....] - ETA: 1s - loss: 0.2338 - acc: 0.9385 - f1: 0.7312 - precision: 0.5870

Epoch 00011: val_f1 did not improve from 0.51366

753/753 [==============================] - 11s 14ms/sample - loss: 0.2133 - acc: 0.9426 - f1: 0.7347 - precision: 0.5887 - val_loss: 0.4408 - val_acc: 0.9032 - val_f1: 0.3857 - val_precision: 0.3265

Epoch 12/100

640/753 [========================>.....] - ETA: 1s - loss: 0.2178 - acc: 0.9344 - f1: 0.7238 - precision: 0.5687

Epoch 00012: val_f1 did not improve from 0.51366

753/753 [==============================] - 11s 14ms/sample - loss: 0.1993 - acc: 0.9396 - f1: 0.7310 - precision: 0.5738 - val_loss: 0.4235 - val_acc: 0.9129 - val_f1: 0.4649 - val_precision: 0.3482

Epoch 13/100

640/753 [========================>.....] - ETA: 1s - loss: 0.2071 - acc: 0.9458 - f1: 0.7570 - precision: 0.6187

Epoch 00013: val_f1 did not improve from 0.51366

753/753 [==============================] - 11s 14ms/sample - loss: 0.1917 - acc: 0.9483 - f1: 0.7531 - precision: 0.6145 - val_loss: 0.4799 - val_acc: 0.9062 - val_f1: 0.4858 - val_precision: 0.3319

Epoch 14/100

640/753 [========================>.....] - ETA: 1s - loss: 0.2104 - acc: 0.9504 - f1: 0.7719 - precision: 0.6420

Epoch 00014: val_f1 improved from 0.51366 to 0.52297, saving model to mp-dl-unh

753/753 [==============================] - 11s 14ms/sample - loss: 0.1932 - acc: 0.9527 - f1: 0.7684 - precision: 0.6379 - val_loss: 0.5000 - val_acc: 0.8869 - val_f1: 0.5230 - val_precision: 0.2922

Epoch 15/100

640/753 [========================>.....] - ETA: 1s - loss: 0.2647 - acc: 0.9105 - f1: 0.6608 - precision: 0.4893

Epoch 00015: val_f1 did not improve from 0.52297

753/753 [==============================] - 10s 14ms/sample - loss: 0.2380 - acc: 0.9205 - f1: 0.6866 - precision: 0.5039 - val_loss: 0.4565 - val_acc: 0.9263 - val_f1: 0.4257 - val_precision: 0.3876

Epoch 16/100

640/753 [========================>.....] - ETA: 1s - loss: 0.2233 - acc: 0.9420 - f1: 0.7482 - precision: 0.6011

Epoch 00016: val_f1 did not improve from 0.52297

753/753 [==============================] - 11s 14ms/sample - loss: 0.2056 - acc: 0.9446 - f1: 0.7432 - precision: 0.5968 - val_loss: 0.4489 - val_acc: 0.8952 - val_f1: 0.3653 - val_precision: 0.3088

Epoch 17/100

640/753 [========================>.....] - ETA: 1s - loss: 0.2032 - acc: 0.9488 - f1: 0.7627 - precision: 0.6338

Epoch 00017: val_f1 did not improve from 0.52297

753/753 [==============================] - 11s 14ms/sample - loss: 0.1852 - acc: 0.9525 - f1: 0.7681 - precision: 0.6367 - val_loss: 0.4300 - val_acc: 0.9151 - val_f1: 0.4169 - val_precision: 0.3557

Epoch 18/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1870 - acc: 0.9412 - f1: 0.7454 - precision: 0.5952

Epoch 00018: val_f1 improved from 0.52297 to 0.54961, saving model to mp-dl-unh

753/753 [==============================] - 11s 14ms/sample - loss: 0.1700 - acc: 0.9465 - f1: 0.7572 - precision: 0.6034 - val_loss: 0.4437 - val_acc: 0.9228 - val_f1: 0.5496 - val_precision: 0.3775

Epoch 19/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1757 - acc: 0.9597 - f1: 0.8024 - precision: 0.6911

Epoch 00019: val_f1 did not improve from 0.54961

753/753 [==============================] - 11s 14ms/sample - loss: 0.1617 - acc: 0.9615 - f1: 0.7995 - precision: 0.6864 - val_loss: 0.4601 - val_acc: 0.9069 - val_f1: 0.4623 - val_precision: 0.3355

Epoch 20/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1771 - acc: 0.9395 - f1: 0.7388 - precision: 0.5875

Epoch 00020: val_f1 improved from 0.54961 to 0.57562, saving model to mp-dl-unh

753/753 [==============================] - 11s 14ms/sample - loss: 0.1675 - acc: 0.9453 - f1: 0.7526 - precision: 0.5974 - val_loss: 0.4548 - val_acc: 0.9226 - val_f1: 0.5756 - val_precision: 0.3766

Epoch 21/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1746 - acc: 0.9539 - f1: 0.7852 - precision: 0.6552

Epoch 00021: val_f1 did not improve from 0.57562

753/753 [==============================] - 11s 14ms/sample - loss: 0.1626 - acc: 0.9557 - f1: 0.7794 - precision: 0.6496 - val_loss: 0.4955 - val_acc: 0.9261 - val_f1: 0.4233 - val_precision: 0.3881

Epoch 22/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1686 - acc: 0.9592 - f1: 0.8006 - precision: 0.6878

Epoch 00022: val_f1 did not improve from 0.57562

753/753 [==============================] - 11s 14ms/sample - loss: 0.1551 - acc: 0.9614 - f1: 0.8001 - precision: 0.6854 - val_loss: 0.5072 - val_acc: 0.9091 - val_f1: 0.4278 - val_precision: 0.3412

Epoch 23/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1871 - acc: 0.9423 - f1: 0.7492 - precision: 0.6002

Epoch 00023: val_f1 did not improve from 0.57562

753/753 [==============================] - 11s 14ms/sample - loss: 0.1701 - acc: 0.9480 - f1: 0.7638 - precision: 0.6105 - val_loss: 0.4906 - val_acc: 0.9208 - val_f1: 0.4136 - val_precision: 0.3704

Epoch 24/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1611 - acc: 0.9591 - f1: 0.8048 - precision: 0.6841

Epoch 00024: val_f1 improved from 0.57562 to 0.58272, saving model to mp-dl-unh

753/753 [==============================] - 11s 14ms/sample - loss: 0.1511 - acc: 0.9617 - f1: 0.8058 - precision: 0.6854 - val_loss: 0.4396 - val_acc: 0.9231 - val_f1: 0.5827 - val_precision: 0.3796

Epoch 25/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1908 - acc: 0.9429 - f1: 0.7511 - precision: 0.6034

Epoch 00025: val_f1 did not improve from 0.58272

753/753 [==============================] - 11s 14ms/sample - loss: 0.1759 - acc: 0.9468 - f1: 0.7537 - precision: 0.6052 - val_loss: 0.4258 - val_acc: 0.9310 - val_f1: 0.5641 - val_precision: 0.4088

Epoch 26/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1779 - acc: 0.9626 - f1: 0.8137 - precision: 0.7120

Epoch 00026: val_f1 did not improve from 0.58272

753/753 [==============================] - 10s 14ms/sample - loss: 0.1625 - acc: 0.9649 - f1: 0.8147 - precision: 0.7107 - val_loss: 0.4802 - val_acc: 0.9150 - val_f1: 0.5226 - val_precision: 0.3566

Epoch 27/100

640/753 [========================>.....] - ETA: 1s - loss: 0.2158 - acc: 0.9254 - f1: 0.7089 - precision: 0.5350

Epoch 00027: val_f1 did not improve from 0.58272

753/753 [==============================] - 11s 14ms/sample - loss: 0.1950 - acc: 0.9332 - f1: 0.7270 - precision: 0.5473 - val_loss: 0.4961 - val_acc: 0.9092 - val_f1: 0.3794 - val_precision: 0.3318

Epoch 28/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1749 - acc: 0.9619 - f1: 0.8177 - precision: 0.7044

Epoch 00028: val_f1 did not improve from 0.58272

753/753 [==============================] - 11s 14ms/sample - loss: 0.1619 - acc: 0.9623 - f1: 0.8055 - precision: 0.6914 - val_loss: 0.4533 - val_acc: 0.9053 - val_f1: 0.4927 - val_precision: 0.3323

Epoch 29/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1875 - acc: 0.9393 - f1: 0.7402 - precision: 0.5881

Epoch 00029: val_f1 did not improve from 0.58272

753/753 [==============================] - 11s 14ms/sample - loss: 0.1698 - acc: 0.9455 - f1: 0.7568 - precision: 0.5993 - val_loss: 0.4593 - val_acc: 0.9332 - val_f1: 0.5228 - val_precision: 0.4165

Epoch 30/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1464 - acc: 0.9578 - f1: 0.8005 - precision: 0.6740

Epoch 00030: val_f1 improved from 0.58272 to 0.58565, saving model to mp-dl-unh

753/753 [==============================] - 11s 14ms/sample - loss: 0.1355 - acc: 0.9602 - f1: 0.8003 - precision: 0.6732 - val_loss: 0.4969 - val_acc: 0.9232 - val_f1: 0.5856 - val_precision: 0.3801

Epoch 31/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1404 - acc: 0.9606 - f1: 0.8147 - precision: 0.6887

Epoch 00031: val_f1 did not improve from 0.58565

753/753 [==============================] - 10s 14ms/sample - loss: 0.1298 - acc: 0.9633 - f1: 0.8167 - precision: 0.6905 - val_loss: 0.5545 - val_acc: 0.9055 - val_f1: 0.4497 - val_precision: 0.3303

Epoch 32/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1644 - acc: 0.9551 - f1: 0.7862 - precision: 0.6627

Epoch 00032: val_f1 did not improve from 0.58565

753/753 [==============================] - 10s 14ms/sample - loss: 0.1520 - acc: 0.9591 - f1: 0.7960 - precision: 0.6699 - val_loss: 0.5339 - val_acc: 0.9174 - val_f1: 0.3990 - val_precision: 0.3617

Epoch 33/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1696 - acc: 0.9425 - f1: 0.7557 - precision: 0.6000

Epoch 00033: val_f1 did not improve from 0.58565

753/753 [==============================] - 11s 14ms/sample - loss: 0.1558 - acc: 0.9483 - f1: 0.7703 - precision: 0.6109 - val_loss: 0.5203 - val_acc: 0.9297 - val_f1: 0.4219 - val_precision: 0.3965

Epoch 34/100

640/753 [========================>.....] - ETA: 1s - loss: 0.2050 - acc: 0.9600 - f1: 0.8113 - precision: 0.6996

Epoch 00034: val_f1 did not improve from 0.58565

753/753 [==============================] - 11s 14ms/sample - loss: 0.1913 - acc: 0.9600 - f1: 0.7960 - precision: 0.6826 - val_loss: 0.5136 - val_acc: 0.8894 - val_f1: 0.4170 - val_precision: 0.2989

Epoch 35/100

640/753 [========================>.....] - ETA: 1s - loss: 0.2066 - acc: 0.9286 - f1: 0.7089 - precision: 0.5464

Epoch 00035: val_f1 did not improve from 0.58565

753/753 [==============================] - 10s 14ms/sample - loss: 0.1891 - acc: 0.9360 - f1: 0.7271 - precision: 0.5584 - val_loss: 0.4396 - val_acc: 0.9269 - val_f1: 0.4287 - val_precision: 0.3925

Epoch 36/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1601 - acc: 0.9583 - f1: 0.7980 - precision: 0.6796

Epoch 00036: val_f1 did not improve from 0.58565

753/753 [==============================] - 10s 14ms/sample - loss: 0.1476 - acc: 0.9607 - f1: 0.7982 - precision: 0.6783 - val_loss: 0.4820 - val_acc: 0.9197 - val_f1: 0.4863 - val_precision: 0.3710

Epoch 37/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1486 - acc: 0.9507 - f1: 0.7752 - precision: 0.6365

Epoch 00037: val_f1 did not improve from 0.58565

753/753 [==============================] - 11s 14ms/sample - loss: 0.1382 - acc: 0.9545 - f1: 0.7814 - precision: 0.6408 - val_loss: 0.4972 - val_acc: 0.9274 - val_f1: 0.4364 - val_precision: 0.3943

Epoch 38/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1395 - acc: 0.9684 - f1: 0.8379 - precision: 0.7417

Epoch 00038: val_f1 did not improve from 0.58565

753/753 [==============================] - 11s 15ms/sample - loss: 0.1305 - acc: 0.9692 - f1: 0.8310 - precision: 0.7329 - val_loss: 0.5471 - val_acc: 0.9167 - val_f1: 0.5373 - val_precision: 0.3608

Epoch 39/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1557 - acc: 0.9579 - f1: 0.8039 - precision: 0.6752

Epoch 00039: val_f1 did not improve from 0.58565

753/753 [==============================] - 10s 14ms/sample - loss: 0.1427 - acc: 0.9614 - f1: 0.8108 - precision: 0.6808 - val_loss: 0.5140 - val_acc: 0.9252 - val_f1: 0.4214 - val_precision: 0.3871

Epoch 40/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1403 - acc: 0.9549 - f1: 0.7917 - precision: 0.6567

Epoch 00040: val_f1 improved from 0.58565 to 0.61811, saving model to mp-dl-unh

753/753 [==============================] - 11s 14ms/sample - loss: 0.1295 - acc: 0.9590 - f1: 0.8019 - precision: 0.6646 - val_loss: 0.5232 - val_acc: 0.9301 - val_f1: 0.6181 - val_precision: 0.4024

Epoch 41/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1241 - acc: 0.9669 - f1: 0.8349 - precision: 0.7268

Epoch 00041: val_f1 did not improve from 0.61811

753/753 [==============================] - 11s 14ms/sample - loss: 0.1149 - acc: 0.9692 - f1: 0.8372 - precision: 0.7278 - val_loss: 0.5646 - val_acc: 0.9202 - val_f1: 0.5297 - val_precision: 0.3688

Epoch 42/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1113 - acc: 0.9679 - f1: 0.8384 - precision: 0.7304

Epoch 00042: val_f1 did not improve from 0.61811

753/753 [==============================] - 11s 14ms/sample - loss: 0.1038 - acc: 0.9704 - f1: 0.8435 - precision: 0.7343 - val_loss: 0.5828 - val_acc: 0.9263 - val_f1: 0.5464 - val_precision: 0.3863

Epoch 43/100

640/753 [========================>.....] - ETA: 1s - loss: 0.0992 - acc: 0.9745 - f1: 0.8704 - precision: 0.7740

Epoch 00043: val_f1 did not improve from 0.61811

753/753 [==============================] - 11s 14ms/sample - loss: 0.0928 - acc: 0.9761 - f1: 0.8715 - precision: 0.7748 - val_loss: 0.5917 - val_acc: 0.9313 - val_f1: 0.5702 - val_precision: 0.4053

Epoch 44/100

640/753 [========================>.....] - ETA: 1s - loss: 0.0955 - acc: 0.9770 - f1: 0.8823 - precision: 0.7930

Epoch 00044: val_f1 did not improve from 0.61811

753/753 [==============================] - 10s 14ms/sample - loss: 0.0893 - acc: 0.9782 - f1: 0.8808 - precision: 0.7913 - val_loss: 0.6317 - val_acc: 0.9255 - val_f1: 0.4839 - val_precision: 0.3868

Epoch 45/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1074 - acc: 0.9722 - f1: 0.8606 - precision: 0.7602

Epoch 00045: val_f1 did not improve from 0.61811

753/753 [==============================] - 10s 14ms/sample - loss: 0.0990 - acc: 0.9746 - f1: 0.8660 - precision: 0.7649 - val_loss: 0.6379 - val_acc: 0.9302 - val_f1: 0.5052 - val_precision: 0.4005

Epoch 46/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1209 - acc: 0.9640 - f1: 0.8311 - precision: 0.7056

Epoch 00046: val_f1 did not improve from 0.61811

753/753 [==============================] - 10s 14ms/sample - loss: 0.1117 - acc: 0.9664 - f1: 0.8319 - precision: 0.7069 - val_loss: 0.6452 - val_acc: 0.9338 - val_f1: 0.4438 - val_precision: 0.4144

Epoch 47/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1087 - acc: 0.9763 - f1: 0.8758 - precision: 0.7941

Epoch 00047: val_f1 did not improve from 0.61811

753/753 [==============================] - 11s 14ms/sample - loss: 0.1004 - acc: 0.9774 - f1: 0.8739 - precision: 0.7907 - val_loss: 0.6740 - val_acc: 0.9174 - val_f1: 0.5592 - val_precision: 0.3589

Epoch 48/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1176 - acc: 0.9667 - f1: 0.8328 - precision: 0.7232

Epoch 00048: val_f1 did not improve from 0.61811

753/753 [==============================] - 11s 14ms/sample - loss: 0.1074 - acc: 0.9698 - f1: 0.8419 - precision: 0.7300 - val_loss: 0.7245 - val_acc: 0.9103 - val_f1: 0.4316 - val_precision: 0.3335

Epoch 49/100

640/753 [========================>.....] - ETA: 1s - loss: 0.0990 - acc: 0.9761 - f1: 0.8772 - precision: 0.7874

Epoch 00049: val_f1 did not improve from 0.61811

753/753 [==============================] - 10s 14ms/sample - loss: 0.0921 - acc: 0.9774 - f1: 0.8755 - precision: 0.7854 - val_loss: 0.6579 - val_acc: 0.9159 - val_f1: 0.4782 - val_precision: 0.3560

Epoch 50/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1273 - acc: 0.9606 - f1: 0.8043 - precision: 0.6868

Epoch 00050: val_f1 did not improve from 0.61811

753/753 [==============================] - 10s 14ms/sample - loss: 0.1173 - acc: 0.9646 - f1: 0.8183 - precision: 0.6969 - val_loss: 0.6570 - val_acc: 0.9305 - val_f1: 0.4314 - val_precision: 0.3995

Epoch 51/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1187 - acc: 0.9750 - f1: 0.8755 - precision: 0.7838

Epoch 00051: val_f1 did not improve from 0.61811

753/753 [==============================] - 11s 14ms/sample - loss: 0.1125 - acc: 0.9751 - f1: 0.8645 - precision: 0.7723 - val_loss: 0.5983 - val_acc: 0.9080 - val_f1: 0.5348 - val_precision: 0.3320

Epoch 52/100

640/753 [========================>.....] - ETA: 1s - loss: 0.1176 - acc: 0.9691 - f1: 0.8425 - precision: 0.7401

Epoch 00052: val_f1 did not improve from 0.61811

753/753 [==============================] - 11s 14ms/sample - loss: 0.1075 - acc: 0.9715 - f1: 0.8479 - precision: 0.7436 - val_loss: 0.5963 - val_acc: 0.9281 - val_f1: 0.5786 - val_precision: 0.3919

Epoch 53/100

640/753 [========================>.....] - ETA: 1s - loss: 0.0958 - acc: 0.9731 - f1: 0.8657 - precision: 0.7643

Epoch 00053: val_f1 did not improve from 0.61811

753/753 [==============================] - 11s 14ms/sample - loss: 0.0890 - acc: 0.9754 - f1: 0.8711 - precision: 0.7693 - val_loss: 0.5994 - val_acc: 0.9329 - val_f1: 0.4421 - val_precision: 0.4104

Epoch 54/100

640/753 [========================>.....] - ETA: 1s - loss: 0.0904 - acc: 0.9796 - f1: 0.8945 - precision: 0.8134

Epoch 00054: val_f1 did not improve from 0.61811

753/753 [==============================] - 10s 14ms/sample - loss: 0.0837 - acc: 0.9807 - f1: 0.8931 - precision: 0.8118 - val_loss: 0.6191 - val_acc: 0.9318 - val_f1: 0.5887 - val_precision: 0.4080

Epoch 55/100

640/753 [========================>.....] - ETA: 1s - loss: 0.0798 - acc: 0.9788 - f1: 0.8889 - precision: 0.8035

Epoch 00055: val_f1 did not improve from 0.61811

753/753 [==============================] - 10s 14ms/sample - loss: 0.0743 - acc: 0.9801 - f1: 0.8897 - precision: 0.8038 - val_loss: 0.6557 - val_acc: 0.9358 - val_f1: 0.5383 - val_precision: 0.4236

Epoch 56/100

640/753 [========================>.....] - ETA: 1s - loss: 0.0775 - acc: 0.9822 - f1: 0.9072 - precision: 0.8325

Epoch 00056: val_f1 did not improve from 0.61811

753/753 [==============================] - 11s 14ms/sample - loss: 0.0720 - acc: 0.9832 - f1: 0.9067 - precision: 0.8317 - val_loss: 0.6965 - val_acc: 0.9321 - val_f1: 0.5790 - val_precision: 0.4076

Epoch 57/100

640/753 [========================>.....] - ETA: 1s - loss: 0.0784 - acc: 0.9786 - f1: 0.8890 - precision: 0.8014

Epoch 00057: val_f1 did not improve from 0.61811

753/753 [==============================] - 10s 14ms/sample - loss: 0.0743 - acc: 0.9804 - f1: 0.8933 - precision: 0.8066 - val_loss: 0.7530 - val_acc: 0.9334 - val_f1: 0.4333 - val_precision: 0.4096

Epoch 58/100

640/753 [========================>.....] - ETA: 1s - loss: 0.0754 - acc: 0.9807 - f1: 0.8987 - precision: 0.8184

Epoch 00058: val_f1 did not improve from 0.61811

753/753 [==============================] - 11s 14ms/sample - loss: 0.0704 - acc: 0.9816 - f1: 0.8968 - precision: 0.8163 - val_loss: 0.7244 - val_acc: 0.9340 - val_f1: 0.4407 - val_precision: 0.4142

Epoch 59/100

640/753 [========================>.....] - ETA: 1s - loss: 0.0727 - acc: 0.9844 - f1: 0.9139 - precision: 0.8513